DevOps Dave here. Over the last year, I’ve generated hundreds of AI images for everything from app UIs to whimsical D&D art. The AI image generation landscape in 2025 is richer (and trickier) than ever. In this guide, I’ll break down the top text-to-image platforms of 2025 – Midjourney, DALL·E 3, Stable Diffusion XL, Leonardo AI, Canva’s Magic Media, Runway ML, and Ideogram – and evaluate them on real-world performance: image realism, prompt control, speed, cost, licensing, and NSFW filters.

(Spoiler: the “best” generator depends on what you need.) Let’s dive in!

Quick Comparison of the Best AI Image Generators

- Midjourney – Best-in-class image quality and artistry, producing hyper-realistic, detailed visuals that surpass most competitors in clarity and texture. It offers rich style options but requires a paid subscription (no perpetual free tier) and enforces strict content rules.

- DALL·E 3 – Most “intelligent” prompt understanding, thanks to OpenAI’s GPT-4 integration. It’s conversational and user-friendly, and it handles text in images more reliably than most rivals. Accessible via ChatGPT (3 free images/day, then included with ChatGPT Plus), it’s cost-effective but has stringent filters limiting certain content.

- Stable Diffusion XL – The open-source powerhouse, offering unmatched customization and control. You can fine-tune models, run it locally for free, and generate images offline – giving you full privacy and ownership (no cloud snooping on your outputs). With enough tweaking, it produces photorealistic results, though getting consistent quality often requires expert prompting.

- Leonardo AI – Versatile creator’s platform, built for speed and control. It combines real-time canvas editing (draw and let AI finish your sketch) with multiple models (e.g. their custom Phoenix model) to yield lifelike, refined art. Generous free credits and affordable plans (from ~$10/month) provide high-quality outputs fast. Some advanced features (e.g. certain editing tools) are gated to paid tiers, but overall it’s a strong all-rounder.

- Canva’s AI Image Generator – Mainstream convenience, woven into a familiar design toolkit. Great for marketers and beginners, Canva’s Magic Media makes it easy to go from prompt to a polished graphic. It’s intuitive and accessible with a freemium model (free users get limited generations; Pro users up to 500/month). The trade-off: output quality is hit-or-miss (sometimes requiring touch-ups), and the system is heavily filtered for safe content.

- Runway ML – Multi-modal creativity with video prowess, plus solid image generation. Runway’s Gen-2 model can produce impressive images and even turn them into short videos. The platform shines in ease of use and rapid iteration – an intuitive interface and over 30 creative AI tools. For pure image work, Midjourney still leads in realism, but Runway offers an all-in-one studio (inpainting, video, audio, etc.). Free credits (25 images on the free tier) let you test it out, and paid plans use a flexible credit system (which can be a bit complex to estimate). NSFW content is strictly off-limits per Runway’s policies (they’ll ban nudity/violence in prompts).

- Ideogram – The text-to-image specialist, tackling a challenge others avoid: text generation in images. This newcomer (from a team of Google Brain alums) has “made text no longer taboo” in AI art. Want a logo or a sign with actual readable letters? Ideogram delivers gorgeous, high-res imagery with crisp typography baked in. It offers a generous free plan (20 images/day) albeit at slower speeds (~30–45s each). Paid plans (from $8/mo) unlock faster generation, a basic editor, 2K upscaling, and private outputs. While its image quality at best “matches anything on the market” (nearly reaching Midjourney-level fidelity), Ideogram lacks advanced editing (no inpainting/outpainting). Still, for branded visuals or memes with text, it holds the AI text crown in 2025.

Below, we’ll dive deeper into each platform’s strengths and weaknesses, followed by a comparison table and an FAQ addressing the top questions about AI image generators. By the end, you’ll know exactly which AI image generator fits your needs in 2025.

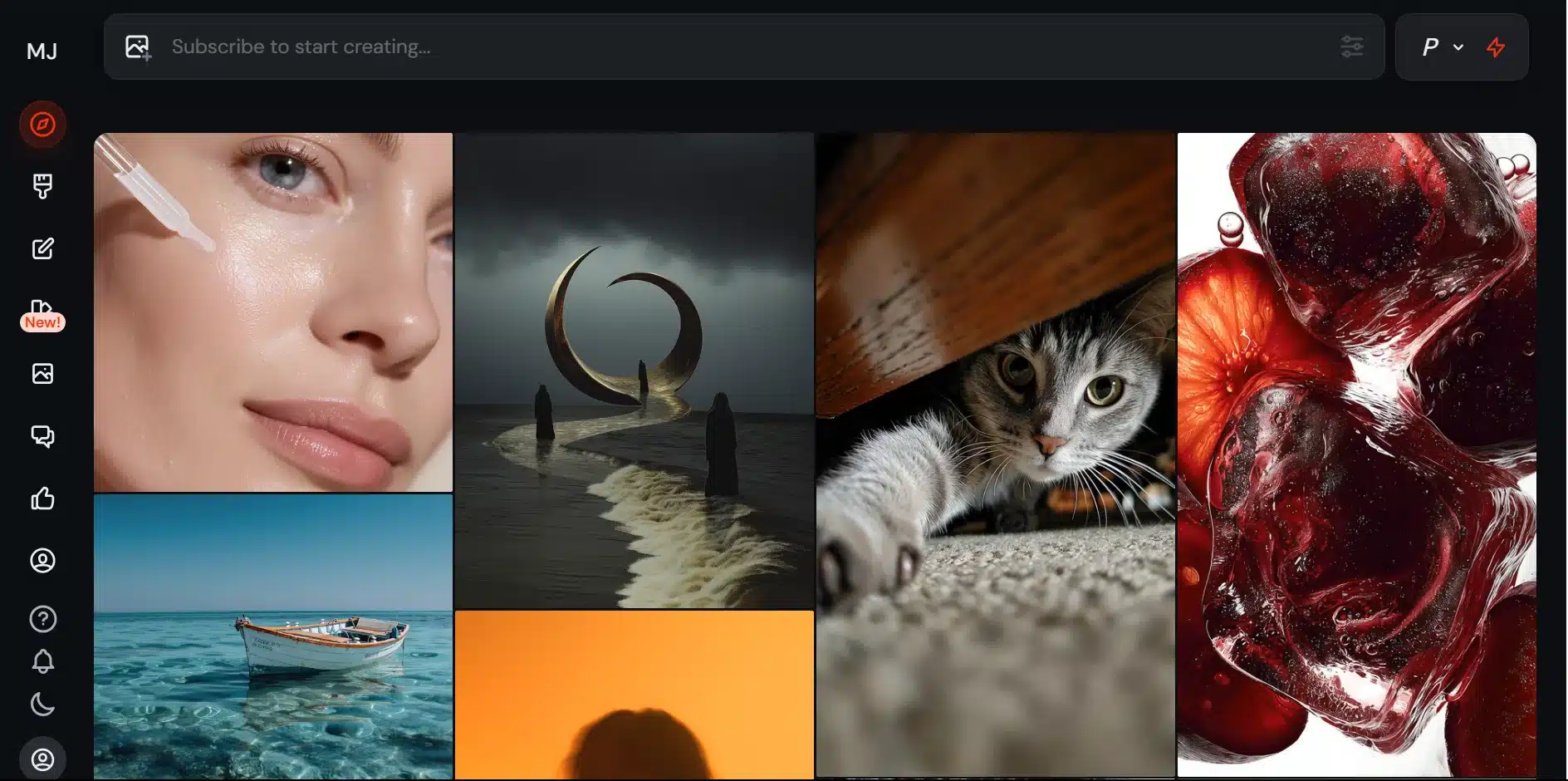

1. Midjourney (v6.x) – Best AI image generator for cinematic and photorealistic art

What it is: Midjourney is an independent research lab’s flagship AI image generator, famous for producing vibrant, hyper-realistic images from text prompts. Originally accessible only via a Discord bot, Midjourney now also has a slick web app (launched in 2024) for prompt crafting and community feed browsing. It’s beloved by digital artists, designers, and anyone who needs jaw-dropping visuals with minimal hassle.

Image Realism & Quality: Simply put, Midjourney’s output quality is top-tier. It generates images with exceptional detail – clarity, intricate textures, vibrant colors, and depth – that surpass most competitors. Whether you ask for a photorealistic portrait or a cinematic landscape, Midjourney often nails the lighting and composition to look like a pro photographer’s work. It tends toward a “beautiful” aesthetic by default (sometimes making images more dramatic or polished than reality), which most users love. Version 6 brought even more coherence and fewer anatomy errors (goodbye nightmare hands… mostly).

Prompt Control: Midjourney interprets natural language prompts very well and offers tons of stylistic control. You can invoke different versions/algorithms (e.g. the latest v6.1 for realism, or specialized modes like “niji” for anime style), adjust the “stylize” parameter for how artistic vs. literal the result should be, and even blend multiple images or provide a reference image to guide the generation. Midjourney excels at prompt fidelity – it generally captures nuanced instructions with striking accuracy, especially for well-described scenes. There are still occasional goofs if your prompt is extremely complex (e.g. specifying multiple distinct subjects or text – it might mash things up incorrectly), but overall it’s very adept at understanding your intent. One notable limitation: Midjourney still struggles to generate written text within images (e.g. a sign or T-shirt logo) – often producing gibberish lettering. It’s improved and sometimes gets short text right, but it’s not foolproof (more on this under Ideogram below).
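To make those controls concrete: Midjourney takes its settings as double-dash parameters appended to the prompt. The helper below is a hypothetical sketch (the function name, its defaults, and the fixed `--niji 6` choice are my assumptions) that assembles a prompt from parameters Midjourney documents: `--v`/`--niji` for the model, `--stylize` (0 to 1000), `--ar` for aspect ratio, and `--no` for exclusions. Check the current parameter reference before relying on specific versions or ranges.

```python
from typing import List, Optional


def build_mj_prompt(subject: str, version: str = "6.1", niji: bool = False,
                    stylize: Optional[int] = None, aspect: Optional[str] = None,
                    exclude: Optional[List[str]] = None) -> str:
    """Assemble a Midjourney prompt string (hypothetical helper, not an official API)."""
    parts = [subject.strip()]
    # --niji selects the anime-focused model; otherwise pin the main model version.
    parts.append("--niji 6" if niji else f"--v {version}")
    if stylize is not None:
        if not 0 <= stylize <= 1000:
            raise ValueError("--stylize accepts values from 0 to 1000")
        parts.append(f"--stylize {stylize}")
    if aspect:
        parts.append(f"--ar {aspect}")
    if exclude:
        # --no takes a comma-separated list of things to leave out of the image.
        parts.append("--no " + ", ".join(exclude))
    return " ".join(parts)


print(build_mj_prompt("cinematic photo of a lighthouse at dusk",
                      stylize=250, aspect="16:9", exclude=["text", "people"]))
```

Paste the printed string into the web app’s prompt box (or after `/imagine` in Discord). Keeping prompts in code like this makes it easy to sweep one dial, say `--stylize`, while holding everything else fixed.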

Speed: Midjourney is fairly fast. On the default “fast” mode, a 1024×1024 image typically renders in ~15 seconds, and you get 4 variants per prompt by default, which is fantastic for quickly finding the look you want. Paying users on higher tiers get more “fast hours” and even “turbo” mode at times. There’s also a relaxed mode (unmetered but queued) if you generate thousands of images; casual users will rarely need it. The Zoom Out and Pan tools (available in both Discord and the web app) extend an image beyond its original frame, performing something like outpainting within seconds. In terms of throughput, Midjourney runs on powerful cloud GPUs, so its speed is hard to match on a typical home setup running Stable Diffusion. One downside: if the service is overloaded, you might wait in a queue (especially in relaxed mode). But in 2025, Midjourney’s infrastructure is robust; slowdowns are rare.

Cost: Midjourney is subscription-only (no unlimited free use). The Basic plan is $10/month for roughly 200 images; Standard ($30/mo) and Pro ($60/mo) offer more generation hours, higher priority, and features like private mode. There’s a 20% discount on annual plans. While there’s no permanent free tier, Midjourney occasionally opens brief free trials (e.g. 25 images) for promotional periods. Compared to others, $10 for 200 images of this quality is great value; as one review put it, Midjourney has “more affordable monthly plans” than many competitors in this space. Just be aware that if you stop subscribing, you lose generation access (though you keep usage rights to past images).

Licensing & Rights: Midjourney’s terms grant paid users full ownership of their outputs to the extent possible under law. In practical terms, you can use your generated art commercially (print it, sell it, whatever) without needing permission. There’s a caveat for big companies: if your organization makes >$1M/year, you’re supposed to be on the Pro plan to use the images commercially. Also note that by default, images you create on basic/standard plans are public (visible in the community gallery and remixable by others). A Stealth Mode to keep images private is only available on Pro ($60) or higher. So, small creators have no real worries – you own your art. Just remember AI images aren’t copyrightable in many jurisdictions (they’re considered public domain by default), but in practice you’re unlikely to face issues using them in your projects.

NSFW Filtering: Midjourney maintains a strict PG-13 policy. The model and mods will block or ban prompts that include explicit sexual content, gore, extremist or hateful imagery, etc. You can’t generate pornographic or erotically explicit images (even artistic nudity is hit-or-miss and generally discouraged). It also started blocking some celebrity or artist name prompts to avoid legal trouble. The community guidelines are quite clear about disallowed content. Violate them and you risk a ban. For 99% of users (who just want cool art or concept designs), this isn’t a big issue – but if your use case is edgy art or unrestricted outputs, Midjourney is not the tool for that.

When to use Midjourney: When you need the highest image quality with minimal tweaking. It’s unbeatable for visual wow-factor: from concept art, fantasy landscapes, and creative portraits to realistic photos for ads or covers, Midjourney’s outputs often look professionally crafted. It’s also great when you want to explore artistic styles (painting, Pixar-like 3D, watercolor, etc.) by just mentioning them in the prompt. However, if you require fine-grained control (like fixing a small detail in the image, or matching a very specific character’s look), Midjourney’s closed model can feel limiting – you can’t train it on new concepts yourself, and edits are limited to re-rolling or upscaling/variations. In those cases, a more open tool might serve you better.

TL;DR – Midjourney excels at turning imaginative prompts into stunning, print-ready images with minimal effort. It’s the reigning champ of AI art quality in 2025, albeit behind a paywall and with some content guardrails.

2. OpenAI DALL·E 3 – Best AI image generator for prompt accuracy

What it is: DALL·E 3 is OpenAI’s text-to-image model, introduced in late 2023 and now deeply integrated into ChatGPT. Unlike DALL·E 2, which you used via a standalone interface, DALL·E 3 works by simply chatting with ChatGPT: you describe the image you want, ChatGPT (running GPT-4) turns your description into a detailed prompt, and DALL·E 3 generates the image from it. It’s a very conversational approach to image generation, great for guiding the AI step-by-step. DALL·E 3 can also be accessed through Microsoft’s Bing Image Creator and via an API for developers.

Image Realism & Quality: DALL·E 3 made a big leap in quality and coherence over its predecessor. It produces vibrant, highly detailed images that in many cases rival Midjourney’s. In side-by-side tests, Midjourney v6 tends to have a slight edge in photorealism and precision, but DALL·E 3’s images (1024×1024 by default) are richly rendered with “atmosphere” and creative flair. For example, a prompt about “a futuristic city at sunset” might come out a tad more stylized with DALL·E – dramatic lighting, maybe a painterly touch – whereas Midjourney might stick closer to photo-real. DALL·E’s generations convey emotion and scene mood very well. However, it can occasionally introduce small inaccuracies or surreal oddities if the prompt is very complex or asks for something that conflicts with its content policy (it might try to sanitize or alter the request, sometimes leading to off-target details).

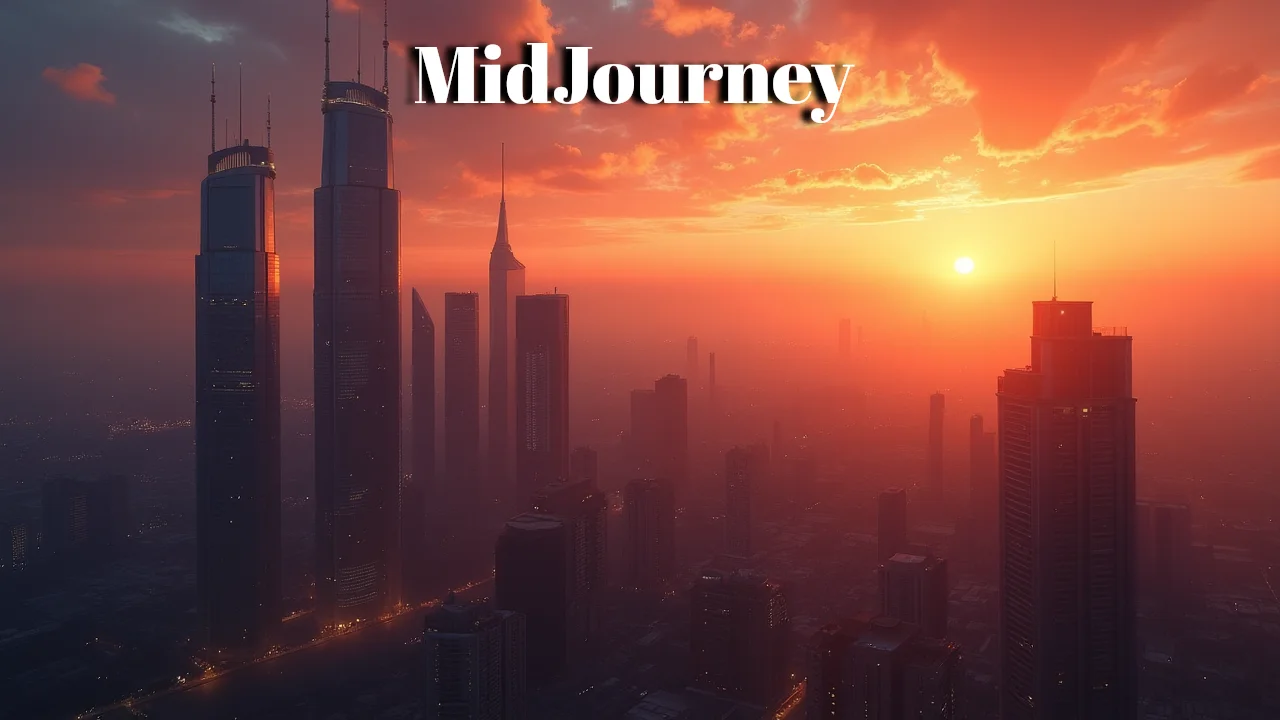

Image comparison: DALL·E 3 (via OpenArt) vs Midjourney

Note: The “DALLE 3 (27 px)” label in the DALL·E 3 image was rendered by DALL·E 3 (via OpenArt) itself, while I added the “MidJourney” label to the other image manually to tell the two apart. The original file sizes were 9.68 MB for DALL·E 3 and 1.08 MB for Midjourney.

One area where DALL·E 3 clearly outperforms most others is handling text within images. If you prompt, “a store sign that says ‘Coffee Hub’ in neon lights,” DALL·E 3 will spell “Coffee Hub” correctly on the sign in most cases, something Midjourney or SDXL usually fail at. This is huge for creating posters, infographics, product packaging concepts, etc. OpenAI attributes much of DALL·E 3’s improved prompt-following to training on highly descriptive image captions, and in ChatGPT, GPT-4 also expands your request into a detailed prompt; both help the model draw the text you asked for rather than gibberish. (Ideogram has a similar focus – more on that later.)

Prompt Control: DALL·E 3 (via the ChatGPT interface) shines in prompt understanding and stepwise refinement. It’s arguably the “most intelligent” image generator when it comes to interpreting complex instructions. Because you interact with it through ChatGPT, you can do things like: give a long prompt or even a short story, and ChatGPT will distill it into an image (it might even create multiple images for different scenes described). You can then say, “Hmm, make the background brighter and add a dog in the foreground,” and ChatGPT will edit or regenerate the image accordingly using DALL·E’s editing capabilities. This iterative dialogue is very powerful for achieving a specific vision – you have an AI assistant that remembers context and can apply changes. ChatGPT also supports inpainting via text: you can select part of the generated image and tell ChatGPT what to change (“replace that tree with a lamp post”), and it will do it. This kind of conversational, context-aware editing is something Midjourney doesn’t natively offer.

Additionally, DALL·E 3 in ChatGPT doesn’t require you to know special commands or parameters. You just describe what you want in plain English (or any language it supports), and it figures it out. Under the hood, GPT-4 is translating your request into an optimal image prompt and perhaps doing safety checks. You can also ask ChatGPT to show you the prompt it’s using – often it’s adding useful specifics. If you want more direct control, you could bypass ChatGPT and call the DALL·E API with specific parameters (like aspect ratio or quality level), but the chat approach is what most users experience.
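For readers who want that direct route, here is a minimal sketch using OpenAI’s official Python SDK (`client.images.generate`). The `size`, `quality`, and `style` values are the ones documented for `dall-e-3` at the time of writing; the `aspect` shorthand (“square”, “landscape”, “portrait”) and the helper names are my own assumptions. Note that the API exposes fixed sizes rather than free-form aspect ratios, and DALL·E 3 returns one image per request.

```python
from typing import Tuple

# Options documented for the dall-e-3 model in OpenAI's Images API at the time of
# writing; re-check the API reference, since these change.
DALLE3_SIZES = {"square": "1024x1024", "landscape": "1792x1024", "portrait": "1024x1792"}
DALLE3_QUALITIES = {"standard", "hd"}
DALLE3_STYLES = {"vivid", "natural"}


def build_dalle3_request(prompt: str, aspect: str = "square",
                         quality: str = "standard", style: str = "vivid") -> dict:
    """Validate options and return keyword arguments for client.images.generate()."""
    if aspect not in DALLE3_SIZES:
        raise ValueError(f"aspect must be one of {sorted(DALLE3_SIZES)}")
    if quality not in DALLE3_QUALITIES:
        raise ValueError(f"quality must be one of {sorted(DALLE3_QUALITIES)}")
    if style not in DALLE3_STYLES:
        raise ValueError(f"style must be one of {sorted(DALLE3_STYLES)}")
    return {
        "model": "dall-e-3",
        "prompt": prompt,
        "size": DALLE3_SIZES[aspect],
        "quality": quality,
        "style": style,
        "n": 1,  # dall-e-3 generates one image per request
    }


def generate(prompt: str, **options) -> Tuple[str, str]:
    """Call the API (requires `pip install openai` and OPENAI_API_KEY in the environment)."""
    from openai import OpenAI  # imported here so the request builder works without the SDK

    client = OpenAI()
    response = client.images.generate(**build_dalle3_request(prompt, **options))
    image = response.data[0]
    # DALL·E 3 rewrites prompts; revised_prompt shows the version it actually rendered.
    return image.url, image.revised_prompt


if __name__ == "__main__":
    # Only builds and prints the request; no API call is made here.
    print(build_dalle3_request("a store sign that says 'Coffee Hub' in neon lights",
                               aspect="landscape", quality="hd"))
```

The `revised_prompt` returned alongside the image is the API’s equivalent of asking ChatGPT to show the prompt it used, which is handy for learning what detail DALL·E adds on its own.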

One limitation: DALL·E 3 doesn’t have explicit style presets or model versions to switch between (unlike Midjourney’s modes). You can of course specify an art style or reference artist in your prompt, but there’s no slider for “more artistic vs more literal” apart from how you phrase your request. In practice, ChatGPT’s guidance makes DALL·E follow prompts very literally (it’s known for excellent prompt adherence) – sometimes to a fault, as it might include unwanted text or odd details if your prompt was too verbose. Still, if Midjourney is like a talented artist with its own vision, DALL·E 3 is more like a diligent illustrator who follows your script exactly.

Speed: Image generation via ChatGPT (DALL·E 3) is slower than Midjourney in my experience. A request often takes 30–60 seconds, especially if the server is busy. Part of the delay is that ChatGPT first runs GPT-4 to rewrite your prompt before DALL·E 3 (itself a diffusion model, not an autoregressive one) renders the image. The result is great output fidelity, but slower generation. If you’re only making a handful of images, waiting up to a minute each is fine. But generating hundreds of variants will feel sluggish compared to something like Stable Diffusion on a good GPU.

Bing’s implementation of DALL·E 3 sometimes feels faster (perhaps due to different settings or compute scaling), giving results in ~15 seconds, but with some quality trade-off. Via ChatGPT Plus, I’d set expectations around ~30s per request. Free users get a few images per day, but the free tier may be throttled to slower speeds or placed in a queue. ChatGPT Plus ($20/mo) users get faster responses and higher rate limits.

Cost: Great news: DALL·E 3 can be used for free. If you have a free ChatGPT account, you can generate up to 3 images per day at no cost (the interface might say “you’ve hit the limit” after a few requests). For most casual needs, that’s fine. If you need more, a ChatGPT Plus subscription is $20/month – and that gives you unlimited DALL·E 3 generations (within “fair use” limits) along with GPT-4 access. Essentially, Plus members can generate images quite liberally, since it “significantly raises the daily limits” (I’ve generated dozens in a day with no issue; my only gripe is that ChatGPT Plus doesn’t offer an aspect-ratio selector for image creation). There is also an OpenAI API option: developers (or power users using third-party apps) can pay per image. The pricing is about $0.04 per image at the default 1024px resolution, with higher resolutions costing more. However, through ChatGPT Plus you don’t pay per image – it’s just the flat subscription. Microsoft’s Bing Chat offers DALL·E 3 image creation for free as well, with a daily cap and with ads.

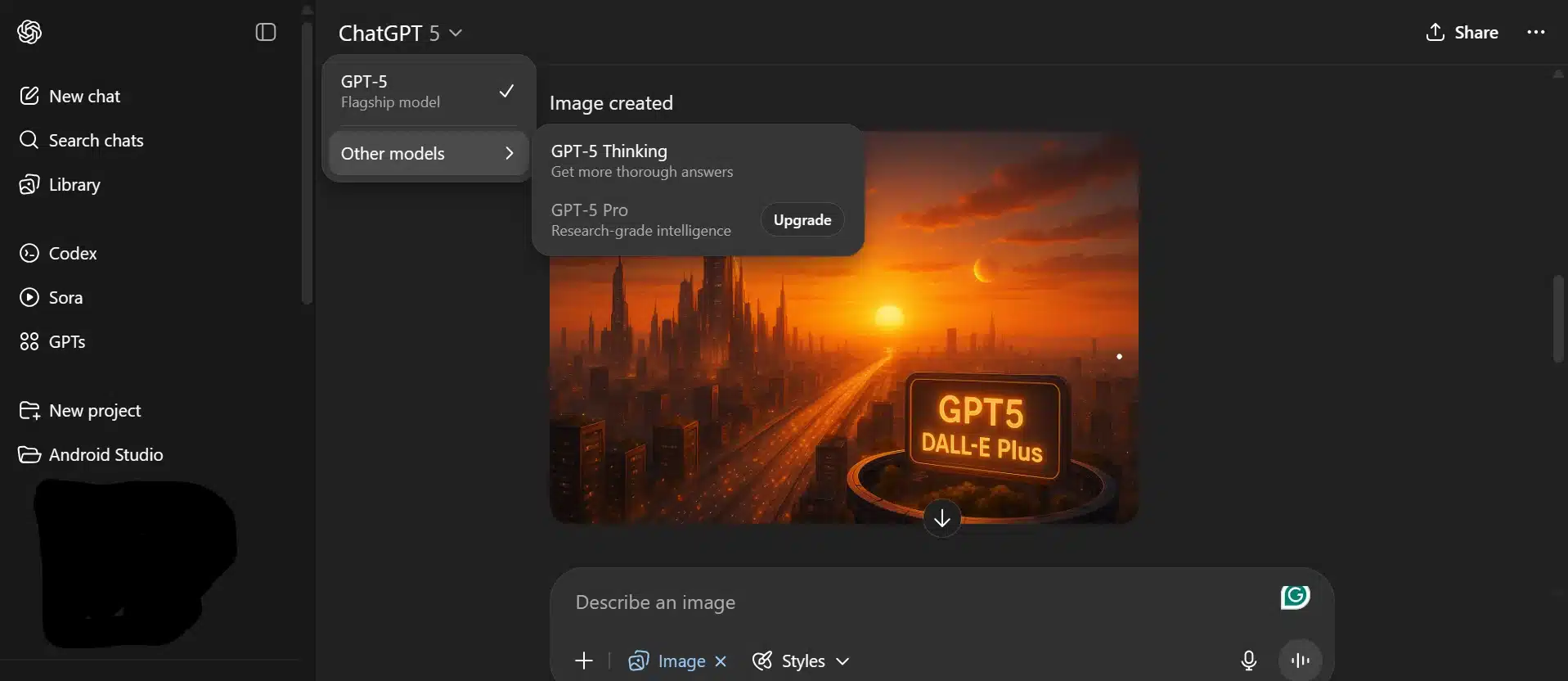

Image comparison: Bing DALL·E 3 (free) vs ChatGPT (GPT-5)

Bottom line: DALL·E 3 is the most cost-flexible option. Free if you only need a few pics, or effectively $20/mo for unlimited (plus the entire ChatGPT service). Compared to Midjourney’s $10 for 200 images, DALL·E via Plus is a steal if you need volume – truly “wins in terms of cost”.
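The trade-off is easy to check with back-of-the-envelope arithmetic. This sketch uses only the prices quoted in this article ($0.04 per standard API image, $20/month for Plus, $10 for about 200 Midjourney images) and assumes Plus’s fair-use limits never bite; the constant and function names are illustrative, and it works in integer cents to avoid floating-point rounding.

```python
# Prices as quoted in this article; vendors change them, so re-check before budgeting.
DALLE3_API_CENTS_PER_IMAGE = 4       # $0.04 per standard 1024x1024 image
CHATGPT_PLUS_CENTS_PER_MONTH = 2000  # $20 flat, assuming fair-use limits are never hit
MJ_BASIC_CENTS_PER_MONTH = 1000      # $10 for roughly 200 images
MJ_BASIC_IMAGES_PER_MONTH = 200


def api_cost_cents(images: int) -> int:
    """Monthly cost of generating `images` pictures through the pay-per-image API."""
    return images * DALLE3_API_CENTS_PER_IMAGE


def plus_break_even_images() -> int:
    """Smallest monthly volume at which Plus costs no more than the API."""
    return -(-CHATGPT_PLUS_CENTS_PER_MONTH // DALLE3_API_CENTS_PER_IMAGE)  # ceiling division


def mj_basic_cents_per_image() -> float:
    """Effective per-image price of Midjourney Basic if you use the whole allowance."""
    return MJ_BASIC_CENTS_PER_MONTH / MJ_BASIC_IMAGES_PER_MONTH


for n in (50, 200, 500, 1000):
    print(f"{n:>5} images: API ${api_cost_cents(n) / 100:.2f} "
          f"vs Plus ${CHATGPT_PLUS_CENTS_PER_MONTH / 100:.2f}")
print("Break-even:", plus_break_even_images(), "images/month")
print("Midjourney Basic:", mj_basic_cents_per_image(), "cents/image")
```

With these numbers, the API is cheaper below 500 images a month and Plus wins above that. Midjourney Basic works out to about 5 cents per image, slightly more than the API’s 4 cents, so at low volume the choice comes down to which output you prefer.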

ChatGPT Plus also gives you access to OpenAI’s text models (GPT-4o, GPT-4.5, and GPT-4o mini at the time of writing), so you get a capable writing assistant alongside DALL·E 3 image generation.

Licensing & Rights: OpenAI’s policy is that you own the images you create with DALL·E, and you are free to use them commercially. They also notably offer indemnification for enterprise users – meaning if you’re a business using DALL·E and someone sues over an output (say a copyright claim), OpenAI might cover the legal risks. This reflects their confidence that DALL·E outputs are legally safe to use. DALL·E 3 is also designed to decline requests for images in the style of living artists, which further reduces infringement risk.

One catch: DALL·E’s content policy is quite restrictive. If your prompt asks for a living person’s face or a trademarked logo or anything potentially infringing, it will refuse. This reduces the chance you accidentally make something that could get you in legal trouble. But it also means creative freedom is limited (no funny celebrity cartoons, etc.).

For most cases, assume you can use DALL·E images just like stock art or your own art. OpenAI does encourage you to add a disclosure that it was AI-generated if used in public/media, but it’s not a legal requirement, more an ethical guideline. If you use the API, you must credit that images are AI-generated.

NSFW & Content Filtering: DALL·E 3 is very strictly filtered. It will not produce sexual or pornographic content (even relatively innocuous nudity often triggers a refusal). It avoids excessive gore or violence. It has filters against hate symbols, extreme political propaganda, etc. Sometimes it even blocks prompts that mention certain sensitive concepts, even if you intended something else (the filter can be overzealous – e.g. users found words like “blood” or “gore” or certain weapon terms might be disallowed). Also, DALL·E 3 explicitly prevents generating images of real people – you can’t properly get a celebrity likeness; it will either refuse or produce a distorted non-identifiable face. This is to avoid deepfake problems.

So, if you need NSFW or truly unfiltered outputs, DALL·E is not the tool. It’s arguably the most constrained of the bunch in content policy, along with Canva’s (which also won’t do NSFW and has similar restrictions). This is the flip side of being responsible and business-friendly – “policy restrictions may limit creative freedom” as one review put it.

When to use DALL·E 3 (ChatGPT): Use DALL·E 3 when you want a very specific image and might need to iterate in detail. It’s like having a patient design assistant: you can type “Make the sky a bit more orange and add a few birds” and get an updated image. That back-and-forth is golden for designers who know what they want. It’s also ideal for tasks involving written text in the image – e.g. marketing materials with slogan text, or UX mockups with legible labels – because DALL·E can actually do the text correctly. And if budget is a concern, DALL·E’s free/Plus options are hard to beat.

However, if you just want one perfect image out of the box and don’t care to iterate or converse, Midjourney might give a slightly more stunning result on the first try. Also, Midjourney offers more in terms of style selection and community discovery (DALL·E has no public gallery or community prompts to get inspired from – it’s a solo experience within ChatGPT).

Think of it this way: Midjourney is like an art studio you walk into and immediately see amazing art on the walls (community showcase) and can create something beautiful but with a bit of its own style. DALL·E is like a private workshop where you instruct an AI artist step-by-step to craft exactly what you envision, with guardrails on content.

3. Stable Diffusion XL – Best AI image generator for open-source customization

What it is: Stable Diffusion XL (SDXL) is a major generation of the open-source image model family released by Stability AI (and collaborators). Being open-source, it’s available on many platforms – you can run it on your own PC, on web apps, in the cloud, or integrated into other software. SDXL (released mid-2023) improved upon the original Stable Diffusion (which generated at 512×512) to natively generate at 1024×1024 with more fidelity and fine details. In 2025, SDXL and its community fine-tuned variants form the backbone of numerous custom models (for example, specialized anime generators, architecture design models, etc.). If Midjourney and DALL·E are closed gardens, Stable Diffusion is the wild forest – full of possibilities, some weeds, but ultimate freedom.

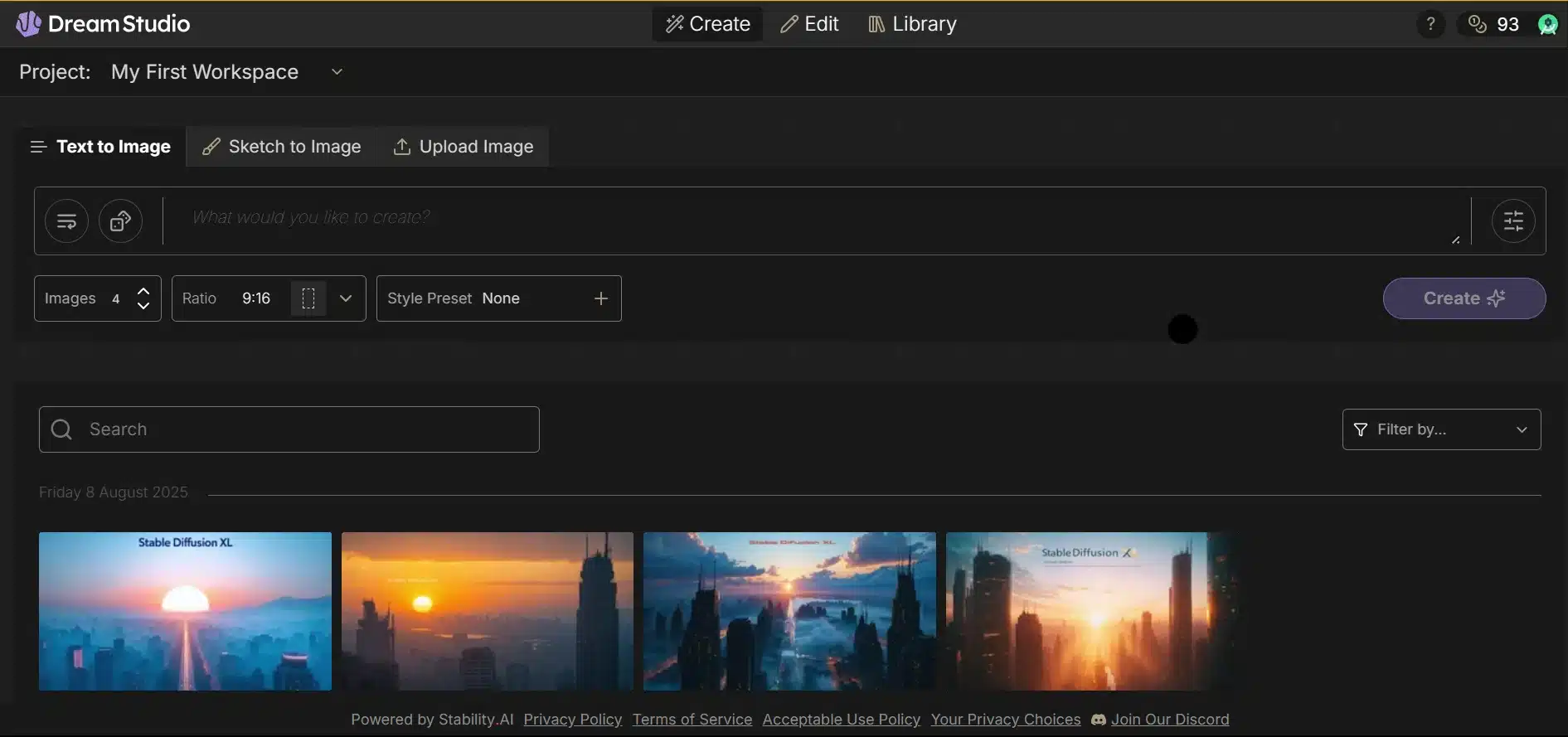

SDXL image generated in DreamStudio (preview)

Image Realism & Quality: Out of the box, SDXL can produce very realistic images – especially with the right prompt. It’s capable of photorealistic faces, landscapes, and complex scenes that approach Midjourney-level quality. However, achieving that consistently often requires more prompt engineering or using community fine-tuned checkpoints. The SDXL base model tends to be a bit more neutral/generic in style compared to Midjourney’s “automatic drama” or DALL·E’s vivid palette. That’s by design: it’s a foundation you can push in any stylistic direction, but it may not wow on the first basic prompt. With the right prompt (and maybe a good negative prompt to avoid artifacts), SDXL can surprise you. For instance, it handles lighting and shadows better than older SD models, and has a decent grasp of human anatomy and faces. Some testers note Midjourney still has the edge in coherence – e.g. SDXL might more often mess up a hand or merge objects strangely if you ask for many elements – whereas Midjourney usually “cheats” such scenes to look plausible. That said, SDXL’s quality gap has closed a lot.

One huge advantage: you can swap in specialized models. Want the highest photorealism? Use a photorealism fine-tune such as Realistic Vision (or its SDXL counterpart, RealVisXL). Want Pixar style? Use a model tuned for that. The community has trained SDXL variants on all sorts of styles. Midjourney has its built-in styles, but Stable Diffusion has effectively unlimited ones, because people share custom models on sites like CivitAI. There are also faster distilled variants such as SDXL Turbo, plus countless community forks. The bottom line: quality is excellent but variable – you have more control, so you can get either mediocre or amazing results depending on your skill and setup.

Prompt Control & Customization: This is Stable Diffusion’s strongest suit. You have ultimate control if you’re willing to get your hands dirty. Key aspects:

- Local Fine-Tuning: You can train the model on your own images using techniques like LoRA (Low-Rank Adaptation) or textual inversion. For example, you can teach SDXL what a specific person looks like, or what your company’s product looks like, so it can generate them on demand – something impossible in Midjourney. This is how folks create AI art of specific characters or styles not in the base model.

- Negative Prompts: SDXL (via most UIs) lets you provide a negative prompt – things you don’t want to see (e.g. “blurry, low-resolution, text, watermark”). This helps avoid common problems.

- Generation Settings: You can tweak the number of inference steps, choose different sampling algorithms (Euler, DPM++ etc.) to trade off speed vs quality, adjust guidance scale (how strictly it follows the prompt vs. creativity), set aspect ratio freely, etc. All the dials are exposed.

- Multiple Models: Run a cute anime model for one job, a gritty realism model for another – all under the SD umbrella.

- Inpainting & Outpainting: Many SD interfaces (like Automatic1111 or Stability’s own DreamStudio) have built-in inpainting. You mask part of the image and prompt for changes, and the model fills it in. You can also outpaint beyond the original frame. These give Photoshop-like powers with AI, letting you incrementally refine an image.

- Integration with pipelines: Since SD is code-first, developers integrate it into design software, 3D workflows (e.g. generate textures), VR, etc. For instance, Adobe’s generative fill in Photoshop is conceptually similar to inpainting with a tuned SD (though Adobe uses their own model Firefly for licensing reasons).
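Here is roughly what those dials look like in code. This is a minimal sketch using Hugging Face’s `diffusers` library (one popular way to run SDXL from Python, not the only one), assuming a CUDA GPU with enough VRAM and the official `stabilityai/stable-diffusion-xl-base-1.0` weights. The `SDXLSettings` class, its defaults, and the LoRA path are hypothetical illustrations, not tuned recommendations.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SDXLSettings:
    """Generation dials discussed above; defaults are illustrative, not tuned recommendations."""
    prompt: str
    negative_prompt: str = "blurry, low-resolution, text, watermark"
    steps: int = 30                  # more steps: slower, sometimes cleaner
    guidance_scale: float = 7.0      # higher: follows the prompt more literally
    width: int = 1024
    height: int = 1024
    seed: int = 42                   # fixed seed makes a result reproducible
    lora_path: Optional[str] = None  # hypothetical path to a LoRA trained on your own images

    def validate(self) -> None:
        # SDXL's VAE downsamples by 8, so diffusers requires dimensions divisible by 8.
        if self.width % 8 or self.height % 8:
            raise ValueError("width and height must be multiples of 8")
        if self.steps < 1:
            raise ValueError("steps must be positive")


def generate_sdxl(settings: SDXLSettings,
                  model_id: str = "stabilityai/stable-diffusion-xl-base-1.0"):
    """Render one image with diffusers (needs torch, diffusers, and a CUDA GPU)."""
    settings.validate()
    import torch
    from diffusers import DPMSolverMultistepScheduler, StableDiffusionXLPipeline

    pipe = StableDiffusionXLPipeline.from_pretrained(
        model_id, torch_dtype=torch.float16, variant="fp16", use_safetensors=True
    )
    # Swap the sampler: DPM++ multistep instead of the pipeline's default scheduler.
    pipe.scheduler = DPMSolverMultistepScheduler.from_config(pipe.scheduler.config)
    if settings.lora_path:
        pipe.load_lora_weights(settings.lora_path)  # layer your fine-tune on the base model
    pipe.to("cuda")
    generator = torch.Generator("cuda").manual_seed(settings.seed)
    return pipe(
        prompt=settings.prompt,
        negative_prompt=settings.negative_prompt,
        num_inference_steps=settings.steps,
        guidance_scale=settings.guidance_scale,
        width=settings.width,
        height=settings.height,
        generator=generator,
    ).images[0]
```

Calling `generate_sdxl(SDXLSettings("product photo of a ceramic mug on an oak table", width=1216, height=832)).save("mug.png")` would render a landscape image. Swapping `model_id` for a community fine-tune hosted on the Hugging Face Hub is one way to switch styles; single-file checkpoints downloaded from CivitAI load through `StableDiffusionXLPipeline.from_single_file` instead.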

All this means Stable Diffusion rewards tinkerers. As one comparison noted, “Stable Diffusion rewards those who tinker with deep control, while Midjourney offers painterly ease”. If you enjoy the process of coaxing the perfect image by adjusting prompts and settings, SDXL is amazing. If you prefer a one-shot magic output, it can do that too, but it might take more trial and error.

Speed: Running SDXL depends on your hardware or the service used. If you have a high-end GPU (say an NVIDIA 3090 or better), you can generate a 1024px image in maybe 5–10 seconds with 30–40 steps. If using an online service like Stability’s DreamStudio or NightCafe, it’s similarly quick (they allocate GPUs on the backend). Mobile apps or lesser GPUs might be slower (20–30 seconds per image or more). Generally, SDXL is fast enough for interactive use – maybe not as lightning-fast as older SD 1.5 (which could churn out images in <5s on good hardware at lower res), but still good. Also, because you can batch-process locally, you could generate 10 images in one go and then sift through them. This is useful for exploration, though it costs time and VRAM.

Compared to Midjourney, a single image generation is similar order of magnitude (a few seconds to a half-minute). However, Midjourney always gives you 4 compositions for each prompt by default. With SDXL, you’d have to run multiple prompts or use a script to get variations. One could argue Midjourney’s infrastructure might be better optimized for consistency and speed per dollar (since they hand-tuned their model for their servers). But SDXL’s speed is really only gated by how much compute you throw at it. On a supercomputer, it could be as fast; on your CPU, it will be very slow. The flexibility is yours.
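That script can be short. The sketch below mimics Midjourney’s four-up grid by batching four images, each with its own seed so any favorite can be re-rendered later. It assumes an SDXL pipeline already loaded with Hugging Face `diffusers`; the helper names and the consecutive-seed scheme are my own choices. Batching four 1024px images at once needs noticeably more VRAM than one, so lower `count` on smaller GPUs.

```python
from typing import List


def variation_seeds(base_seed: int, count: int = 4) -> List[int]:
    """Consecutive seeds, so each variation can be re-rendered on its own later."""
    if count < 1:
        raise ValueError("count must be at least 1")
    return [base_seed + i for i in range(count)]


def four_up(pipe, prompt: str, base_seed: int = 1234, count: int = 4):
    """Render `count` variations in one batch from an already-loaded diffusers SDXL pipeline."""
    import torch

    seeds = variation_seeds(base_seed, count)
    # One generator per image: diffusers accepts a list whose length matches the batch size.
    generators = [torch.Generator(device=pipe.device).manual_seed(s) for s in seeds]
    images = pipe(prompt=prompt, num_images_per_prompt=count, generator=generators).images
    return list(zip(seeds, images))  # keep each seed next to its image
```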

One thing: generating very high resolution images is possible via SDXL + upscaling. E.g., you can generate 1024px and then use an AI upscaler (like ESRGAN or SD’s built-in upscaler) to get a 4K image. This two-step process is slower but yields print-quality outputs. Midjourney has an upscaler but limited to maybe ~1.5–2x. SD pipelines can upscale 4x or more, albeit with diminishing returns.
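Whether an upscale counts as print-quality mostly comes down to pixels per inch. Assuming the common 300 DPI guideline for photo prints (a rule of thumb, not a hard spec), this quick calculation shows how far each upscale factor stretches a 1024px square.

```python
from typing import Tuple


def print_size_inches(width_px: int, height_px: int, dpi: int = 300) -> Tuple[float, float]:
    """Largest print size at the given DPI without further resampling."""
    return width_px / dpi, height_px / dpi


for scale in (1, 2, 4):
    side = 1024 * scale
    w_in, h_in = print_size_inches(side, side)
    print(f"{scale}x -> {side}px: {w_in:.1f} x {h_in:.1f} in at 300 DPI")
```

So a raw 1024px render prints at only about 3.4 inches square at 300 DPI, while a 4x upscale reaches roughly 13.7 inches. Keep in mind that an upscaler adds pixels, not guaranteed detail, which is where the diminishing returns come in.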

Cost: Free, free, free (if you want it to be)! The model is downloadable (though a 7GB file) and you can run it on your own hardware or a rented cloud machine. Many community UIs are free and open-source. If you don’t have the hardware, you can use cloud APIs or hosted websites. Stability AI’s official DreamStudio web app offers a limited free trial (e.g. some credits on signup) and then a pay-as-you-go credit system – roughly $10 gets you hundreds of generations. There are also third-party sites (some supported by ads or freemium models) where you can use SDXL at no cost for a set number of images per day.

For truly cost-sensitive scenarios or large-scale use, Stable Diffusion is the cheapest because you’re not paying a monthly fee for the model itself – only for compute. If you already have a gaming PC, you essentially generate images for electricity cost. This makes SDXL ideal for researchers, indie game devs, or anyone who might need thousands of images or wants to experiment without a meter running.
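“Electricity cost” is small but easy to estimate. The figures below are illustrative assumptions, not measurements: about 350 W of GPU power draw, 8 seconds per 1024px image (within the 5 to 10 second range mentioned earlier for a high-end card), and $0.15 per kWh. Whole-system draw and your local rate will change the result.

```python
def electricity_cost_per_image(gpu_watts: float, seconds_per_image: float,
                               usd_per_kwh: float) -> float:
    """Energy cost of one image: watts x seconds -> watt-hours -> kWh -> dollars."""
    kwh = gpu_watts * seconds_per_image / 3600 / 1000
    return kwh * usd_per_kwh


# Illustrative assumptions only: 350 W GPU, 8 s per image, $0.15/kWh.
per_image = electricity_cost_per_image(350, 8, 0.15)
print(f"${per_image:.5f} per image, ${per_image * 1000:.2f} per 1,000 images")
```

Under those assumptions, a thousand images cost on the order of ten cents of electricity, versus $40 for the same volume on the DALL·E 3 API at $0.04 each.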

From a business perspective, SDXL’s open license (CreativeML Open RAIL++-M) permits commercial use, subject to its use-based restrictions. (Though as Zapier noted, companies using Midjourney or Ideogram outputs haven’t faced issues either; with SD, there’s no platform terms of service to consider, only the model license and ensuring no copyrighted material is directly reproduced.)

Licensing & Rights: All Stable Diffusion-generated images are effectively yours to use freely. Stability’s license doesn’t claim ownership of outputs. In fact, by US law the outputs likely aren’t copyrighted by anyone, which means they’re public domain – you can use them, and so could others if they had the exact image (but the chance of an identical image being reproduced by chance is astronomically low unless you share it). If you fine-tune a model on private data, obviously keep that model private if the data’s sensitive. But otherwise, there’s no content usage monitoring – if you run it locally, no one even knows what you generated, unlike cloud services.

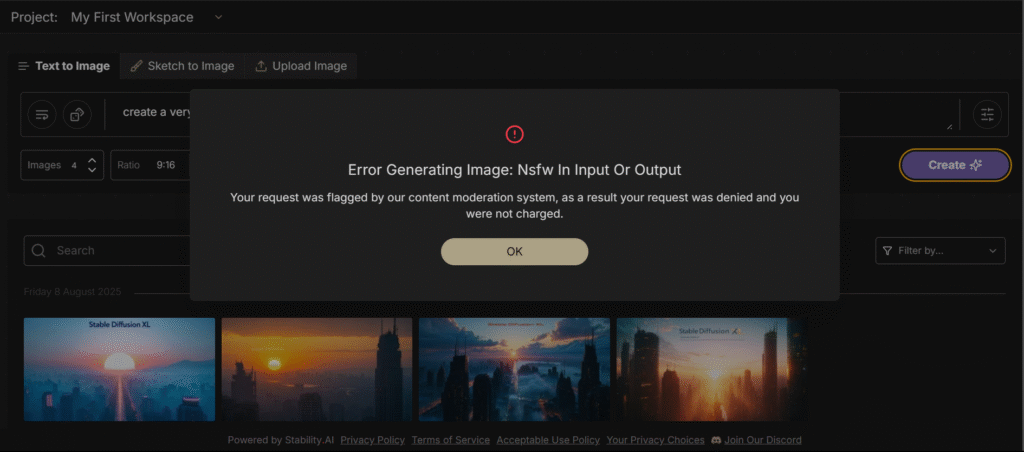

Illegal-imagery prompt on Stability AI’s SDXL

One caution: because SDXL is open, people can generate anything, including unethical or illegal imagery. Stability AI has an “ethical use policy”, and many distributions and hosted UIs include an NSFW filter (some pipelines flag adult content, and many UIs auto-blur such results). But you can turn that off if running custom code. This means responsibility lies with the user. Companies worried about the legal uncertainty around training-data scraping might avoid SD, but so far, using SD outputs is generally seen as safe – Stability even has “clear IP protections” and encourages responsible use. Also, since you can generate completely privately, ownership and privacy are strongest with Stable Diffusion: as an eWeek review put it, running locally means outputs aren’t accessible to others, bolstering ownership protection.

NSFW & Content Filtering: By default, Stable Diffusion (if you use the official weights and default config) will allow a wider range of content than Midjourney/DALL·E. The model was trained on a broad internet scrape (LAION dataset), which includes some not-safe-for-work material. The base SDXL model will produce nudity if prompted (tasteful or explicit, depending on prompt). Most public SD services still filter prompts and outputs to avoid abuse – e.g. Stability’s DreamStudio won’t let you generate hardcore porn or extreme gore, and will likely blur/flag images with sexual content. But unlike closed models, you have the option to use an unfiltered version or custom models for NSFW. There are entire communities around using SD for erotic art or other disallowed content – though obviously, be mindful of laws and ethics (no illegal content, etc.).

In short, Stable Diffusion offers the possibility of NSFW generation if that’s something you need (e.g., AI art for adult games or uncensored creative projects), whereas the other top tools in this roundup ban it outright. Just know Stability AI’s official stance: they “actively ban misuse” and moderate their official tools. When you self-host, the responsibility is yours.

When to use Stable Diffusion XL: Use SDXL when you need maximum control, customization, or privacy. If you want to own the whole stack (model and outputs) without relying on a third party, or if your project demands a unique visual style you’re willing to train a model for, SD is the way. It’s great for developers integrating AI art into apps (since you can run the model locally or via API on flexible terms); many AI image features in apps are SD under the hood. It’s also ideal if you have very specific style needs, e.g., “I need comic-book style images that exactly match this existing character design”: you can train SDXL to do that. And if you need dozens of images without paying a fortune or hitting a rate limit, SD lets you generate at will.

However, if you’re not tech-savvy and just want pretty pictures quickly, SDXL has a learning curve. The paradox of choice (so many models and settings) can be overwhelming, which is why many casual users opt for Midjourney, or for Leonardo’s polished interface on top of SD. But those willing to invest time will be rewarded: as one comparison concluded, SDXL “stands out in reliable consistency, accessibility, and pricing.” It runs anywhere, it’s available to everyone, and it’s free or cheap, which are major pluses.

In summary, SDXL = freedom and flexibility. It’s a toolbox rather than a single tool: immensely powerful, but you’re the one swinging the hammer. For the hacker/designer who loves to fine-tune, SDXL in 2025 is a dream come true.

4. Leonardo AI – Best AI image generator for game assets and concept art

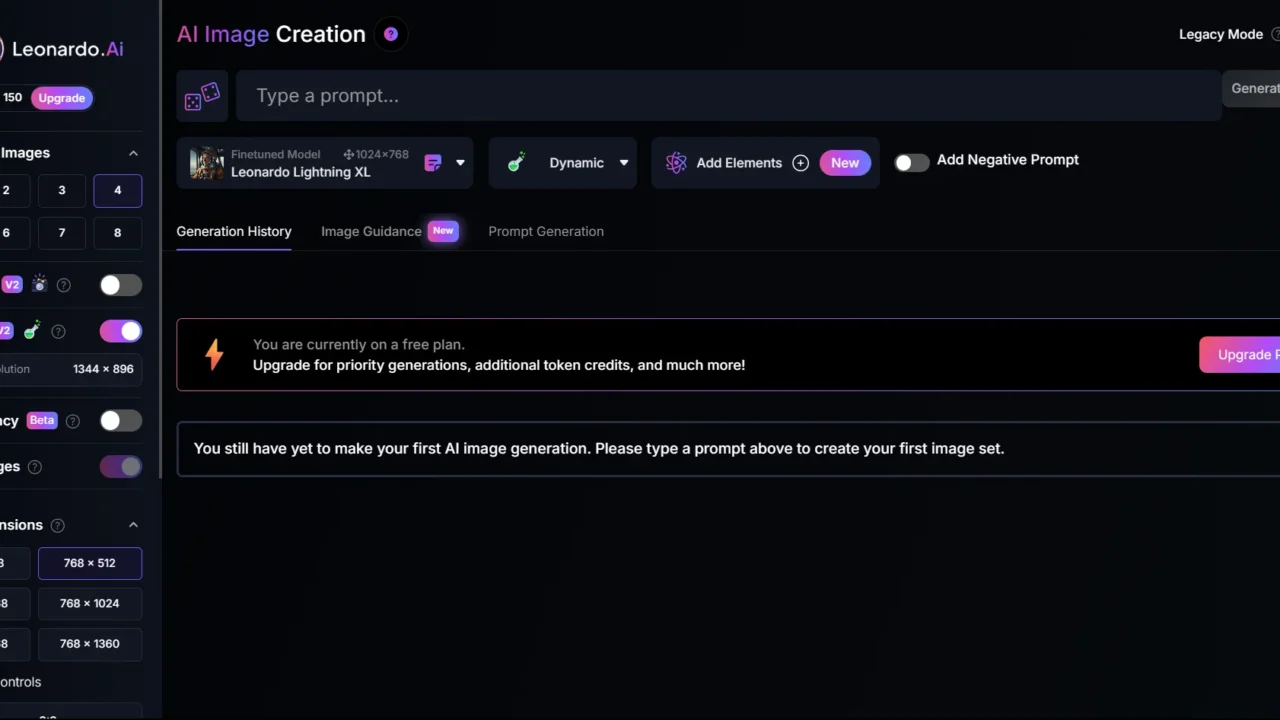

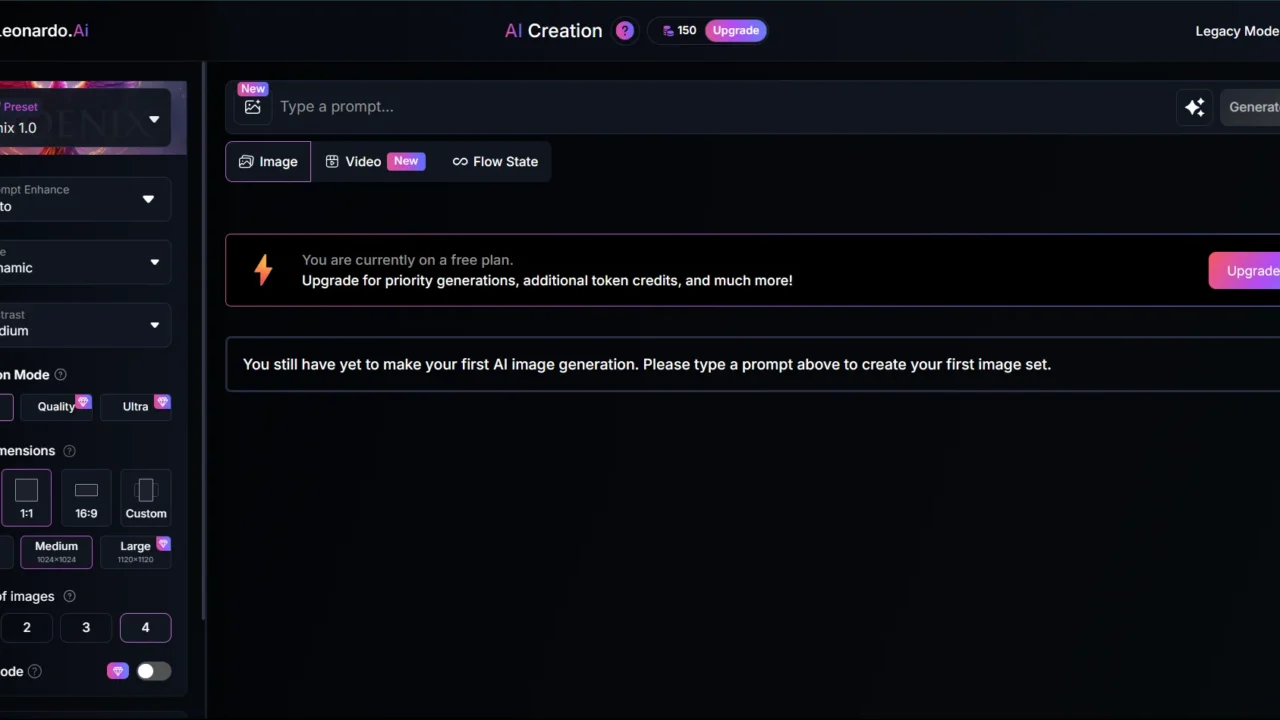

What it is: Leonardo.Ai is an all-in-one AI creative platform that leverages Stable Diffusion under the hood but wraps it in a user-friendly interface packed with features. Think of Leonardo as a hybrid of Midjourney’s image quality and Stable Diffusion’s customization, delivered in a polished web app. It gained popularity in 2023 by offering an accessible way to use various SD models and train your own, with a generous free tier that attracted many creators. By 2025, Leonardo has introduced its own models like Phoenix (their proprietary foundation model) and Leonardo Select models (like Lucid Origin for vibrant HD images). It’s basically a playground for AI art with tools for generation, training, and even some animation.

Leonardo AI-generated image preview

Image Realism & Quality: Leonardo can produce top-notch images, comparable to raw Stable Diffusion XL quality and sometimes nearing Midjourney-level depending on the model used. In fact, Leonardo’s team fine-tuned their Phoenix model to be highly photorealistic and consistent. It delivers “lifelike results” with detailed, realistic visuals suitable for concept art or marketing assets. The platform also boasts 100+ artistic styles and models you can choose from for different aesthetics – for instance, you might pick a “Cyberpunk City” preset model or style prompt to guide the output. This means you’re not stuck with one flavor; you can get cartoonish images one moment and architectural renders the next.

Users have noted that Leonardo’s default outputs are very good, but perhaps slightly behind Midjourney’s unique polish – in the sense that Midjourney might still handle complex prompts or lighting scenarios with more grace. Leonardo, using either SDXL or Phoenix, can occasionally produce inconsistent details that require a re-roll. However, the gap is narrow, and Leonardo is constantly improving its models. For example, their newly launched Lucid Origin model (Aug 2025) is Full HD and aims to raise the bar on diversity of subjects and coherence (less mode-collapse on faces, etc.). It’s safe to say Leonardo’s quality is excellent, and for many prompts you’d be hard-pressed to tell apart a Leonardo image from a Midjourney one. The advantage might even swing to Leonardo if you leverage its editing tools to fine-tune the output.

Prompt Control & Features: This is where Leonardo truly shines as a platform. It’s “built for creators who want a mix of speed, control, and high-quality images”, offering a robust set of tools:

- Real-Time Canvas Editor: A standout feature – you can sketch or import an image on a canvas, and then use AI to refine or auto-complete your drawing. For instance, draw a rough shape of a car and prompt “a red sports car,” and Leonardo will try to turn your sketch into that car, preserving your composition. This human-AI collaboration is fantastic for concept artists who want specific layouts.

- Edit Existing Images: Leonardo has an “Edit with AI” feature (akin to inpainting). You can upload an image, brush over an area, and prompt changes. Want to change your character’s outfit or remove an object? It’s doable in-app without Photoshop.

- Prompt Generation & Guidance: Leonardo includes prompt assistants and a community feed. You can see what prompts others used for their artworks and even use them. There’s inspiration galore.

- Multiple Models & Styles: As mentioned, Leonardo lets you pick from dozens of models or blend them. Their UI often abstracts it as styles or specific named models (like “Leonardo’s Signature” vs “Anime”, etc.). You can also import custom models or LoRAs. It essentially contains Stable Diffusion’s flexibility but in a friendlier package.

- Personal Model Training: Leonardo allows users to train their own “models” (actually LoRA fine-tunes) by uploading a set of images. For example, train on 20 images of your face, then use the trained model to generate yourself as an “astronaut” in any scene. This was a big draw, especially for free users (some limits apply; the free tier might have a wait or smaller training sets). It takes Midjourney’s “/describe” and “variations” to the next level: full custom model creation, no coding needed.

- “Flow” and Variations: They introduced features like Flow State, which takes a single prompt and rapidly generates a stream of variations so you can pick your perfect image faster. It’s a bit like DALL·E’s conversational refinement, but in a rapid-fire visual mode.

- 3D model & Asset Generation: A newer feature – Leonardo can also generate 3D-like assets or even basic 3D models (in beta). E.g., it has a “3D texture generator” or can output depth maps for images. This is more experimental but shows Leonardo’s ambition to be a full creative suite.

All these give the user granular control when desired. Yet, the interface remains approachable – a novice can get started with default settings, while power users have advanced panels for fine adjustments. A review of Leonardo vs a simpler tool noted that “Leonardo.Ai is designed for those who want high-quality images with advanced customization options… precision and flexibility for artists”.

Speed: Leonardo is built for speed and volume. Its generation is fast, often just a few seconds per image on the paid plan. It also has features like “batch generation” where it will create, say, 8 images simultaneously (costing 8 credits) so you don’t have to manually hit generate multiple times. The web app might queue during peak times for free users, but generally it’s very responsive. They advertise real-time iteration, and indeed the canvas mode feels quite interactive – you doodle, hit a button, and within moments see the AI’s enhancement.

Under the hood, Leonardo likely uses optimized SDXL pipelines and possibly multi-GPU inference for faster outputs. It also might not always run full 30 steps if not needed, to save time (just speculation). The key point: Leonardo doesn’t feel slow. In comparative testing, users found it competitive with Midjourney and others in terms of getting results quickly, which is great given all the features layered on top. One community note: some advanced features (like training or heavy upscaling) may take longer or use more credits, but simple gens are snappy.

Cost: Leonardo uses credit-based pricing with a free tier. Free users get a daily allotment of credits (150 credits/day at one point; the current figure may be closer to 50, and it changes). Each image generation might cost 1 credit, with upscales or certain models costing extra. That means free users can generate dozens of images per day at no cost, which is very generous, and it was a big reason Leonardo got popular: you could use it extensively without pulling out your wallet, unlike Midjourney’s hard paywall.
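To make the credit math concrete, here’s a small Python sketch that converts a daily allotment into an image count. The 150 and 50 credits/day figures come from the paragraph above and the 1-credit base cost is the text’s own example; the per-image `surcharge` (for a premium model or an upscale) is a hypothetical parameter, not Leonardo’s actual price list.

```python
def images_per_day(daily_credits: int, cost_per_image: int = 1, surcharge: int = 0) -> int:
    """Whole images that fit in a daily credit allotment.

    cost_per_image: credits for one plain generation (1 in the example above).
    surcharge: hypothetical extra credits per image, e.g. for a premium model
    or an upscale. Not an official Leonardo figure.
    """
    per_image = cost_per_image + surcharge
    if per_image <= 0:
        raise ValueError("each image must cost at least one credit")
    # Floor division: leftover credits can't buy a partial image.
    return daily_credits // per_image


if __name__ == "__main__":
    for allotment in (150, 50):  # historical and possible current daily allotments
        plain = images_per_day(allotment)
        upscaled = images_per_day(allotment, surcharge=1)  # hypothetical +1 credit each
        print(f"{allotment} credits/day: {plain} plain images, {upscaled} with a +1 surcharge")
```

With these inputs, a 150-credit day yields 150 plain images (75 with the surcharge) and a 50-credit day yields 50 (25 with the surcharge), which shows how quickly premium options eat into a free allotment.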

Paid plans on Leonardo start around $10/month for a Pro subscription. The Pro plan gives you more credits (e.g. 500–1500 credits/month depending on level) and unlocks features: higher resolution outputs, private generations (no one else sees your images if you choose), faster queue priority, and training more personal models. They also offer pay-as-you-go options: e.g., you can buy extra credit packs, which is nice if you only occasionally need a burst of usage. There’s even an enterprise tier for teams with big needs.

Comparatively, if you’re a casual user, Leonardo’s free tier might suffice (among the hosted tools, few others offer a lasting free tier this generous; DALL·E’s free access in Bing is limited). If you’re a power user, $10 on Leonardo can go a long way (400+ images plus all the tools). Some cons to note: free-tier images are public (in the community feed) by default and are subject to the content rules. And if you exhaust your credits, you either wait for the daily refill or upgrade.

Licensing & Rights: Do you own the images? Generally, yes: Leonardo states you have rights to use what you create. A staff member on Reddit clarified, “You have the rights to all images you create on the platform, and can use them commercially however you see fit.” However, the free tier has a twist: Leonardo’s terms indicate that Leonardo retains ownership of images made by free users while granting those users a broad license to use them. In other words, on the free plan your images are public and Leonardo can reuse them (for training, marketing, etc.); you can still use them for anything, but because you don’t own them exclusively, you can’t stop others, or Leonardo, from using them too. If you subscribe, the rights appear to belong to you and your images can be kept private, so you effectively have full control. This structure is meant to push businesses that care about IP toward paid plans. In practice, even free users can use their images commercially; the catch is that someone else could generate a very similar image, or Leonardo might showcase yours. As of 2025, Leonardo isn’t known to use member images beyond model training and community galleries, so it’s not a big worry for most users, but a large company might opt for a paid plan to be safe.

NSFW & Filtering: Leonardo has strict content moderation by default; they “block NSFW image generation by default” on both the API and the platform. Certain trigger words (even surprisingly mild ones like “young” in some contexts) can get a prompt flagged. They are quite cautious: anything that could imply underage content, or overtly sexual phrasing, will be stopped. If a generated image is considered mildly NSFW (say, artistic nudity), it typically appears blurred and requires a click to reveal (if you have NSFW visibility enabled in settings). You can choose to allow NSFW in your feed (so you see others’ posts marked NSFW), but generating it is still mostly filtered. There have been attempts and community discussions about generating NSFW with Leonardo; some claim it’s possible with clever prompt wording or an unfiltered model slot, but officially it’s not allowed. The consensus: “if you want to generate adult content, Leonardo isn’t your platform. The filters are strict and deliberate.” This matches Leonardo’s positioning as a professional tool that doesn’t want to be known for facilitating explicit content. So, like DALL·E and Midjourney, Leonardo keeps things mostly SFW. Violence and gore are also limited: fantasy battle scenes are probably fine, but extreme gore might trip the filter. They also forbid using it for hate or illicit purposes and will ban violators.

When to use Leonardo AI: If you want the power of Stable Diffusion with the ease of Midjourney – Leonardo is your friend. It’s perfect for those who want customization (specific styles, personal models, editing) but don’t want to run code or juggle multiple tools. Designers working on concept art, game assets, marketing graphics, etc., find Leonardo super handy because it consolidates so many features: you can go from idea to final touched-up image in one platform. The generous free tier means students or hobbyists can create a lot without cost. And for small businesses, the commercial-friendly terms and ability to keep things private on a paid plan are reassuring.

Leonardo is also a great learning tool. If you’re new to prompt crafting, the community examples and the ability to tweak and regenerate quickly will likely help you learn faster than Midjourney’s more rigid prompt-and-output cycle.

One scenario: say you have a very particular character design in mind – with Leonardo you could sketch it, generate variants, fine-tune a model on the best look, then produce that character in various poses. This would be tough to achieve in other tools alone. Leonardo basically augments the creator rather than replacing them: you guide it heavily and it speeds up the grunt work.

In short, Leonardo AI is about control + convenience. It may lack a bit of the “surprise magic” of Midjourney’s secret sauce, but it makes up for it by letting you be the magician. And it’s constantly evolving – by 2025 it’s one of the most feature-rich AI image generators out there, yet still approachable.

5. Canva’s AI – Best AI image generator for quick marketing designs

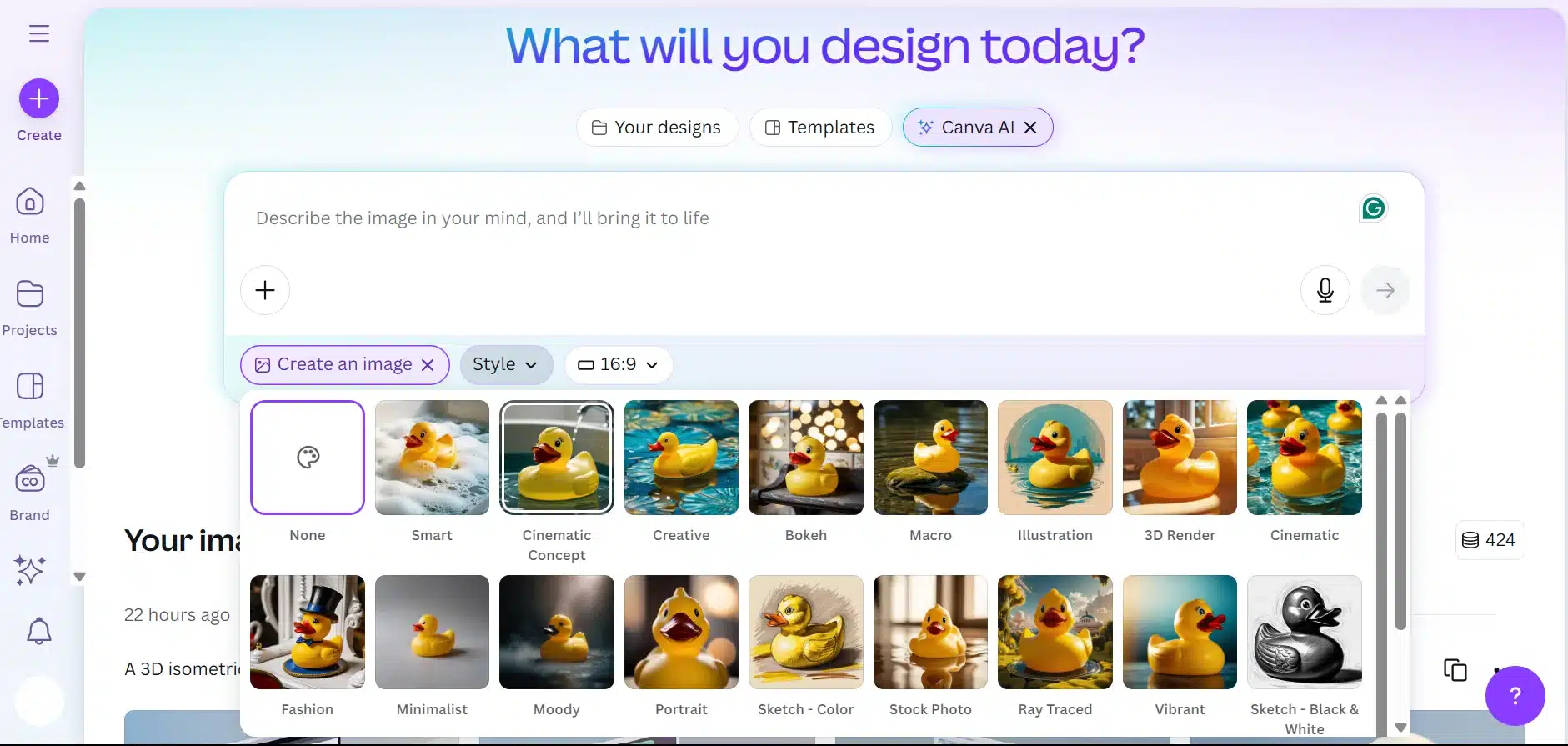

What it is: Canva is a massively popular online design tool (for creating social media posts, presentations, etc.), and it has integrated AI image generation (Magic Media) directly into its interface. Canva’s AI generator is powered on the back-end by models like Stable Diffusion and possibly DALL·E/Imagen for certain styles. The goal isn’t to be an art purist’s tool, but rather to help everyday people generate custom images as part of their design projects. By 2025, Canva’s AI image capabilities are robust: you can generate an image from text and immediately drop it into a flyer or video within Canva’s editor.

Canva AI-generated image preview

Image Realism & Quality: Canva’s image generator yields decent quality images, but not on the level of Midjourney or Leonardo for complex or ultra-realistic scenes. They tend to be good for “generic use” – e.g., a background texture, a simple icon or illustration, a concept image for a blog graphic. In head-to-head comparisons, Canva’s outputs might appear a bit more bland or have minor quirks (it sometimes creates inaccurate details that need manual fixing). This is partly because Canva likely uses a slightly older or heavily moderated model to ensure nothing problematic is produced. They also offer multiple “styles” when generating: for example, you might choose “Photo,” “Drawing,” “3D,” etc., which correspond to different model presets.

However, Canva has improved quality over time. Early on it used Stable Diffusion 1.5 (512px), which gave very average results. By 2024, it had integrated SDXL and even OpenAI’s DALL·E via an app plugin, so if you use the OpenAI DALL·E app within Canva, you get DALL·E 3-level images directly in your Canva workspace. A Google Imagen app was also mentioned, perhaps for those with Google Cloud accounts. The default Magic Media likely uses SDXL or a derivative with Canva’s safety layers.

Prompt Control: Canva’s interface is extremely simple: you type a description and hit generate. It’s geared for novices. You don’t get advanced controls like weightings, negative prompts (explicitly), or model choices unless you use those separate apps (and even those are simplified). The upside: anyone can do it. The downside: power users might feel constrained. For instance, if the output isn’t what you hoped, you can try rewording the prompt or clicking “Generate again” for new variations, but you won’t have sliders or settings to tune. Canva typically gives you a few style options or examples to get better results, and that’s it.

A neat integration is that you can use any Canva template or layout and simply insert an “AI Image” element, describe what you need, and it appears right in your design. So the control Canva prioritizes is layout/design control, not the fine control of the image generation itself. You can always download an image and edit further in Photoshop or bring it to another generator, but within Canva, it’s one-shot generation.

One area of control Canva does address is prompt assist and example prompts to guide users (like suggesting “a watercolor painting of…” etc.), since many Canva users might not know how to phrase a good prompt.

Speed: Canva’s AI image generation is pretty fast, often taking around 5-10 seconds per image. Part of why it’s quick is likely that they constrain complexity (perhaps fewer steps or a smaller resolution, then upscaling), and they might cache common prompts. The objective is to keep your design flow uninterrupted; you wouldn’t want to wait a minute while making a poster. So Canva likely prioritizes speed over absolute fidelity.

In practice, I’ve found it responsive: type something, and within moments four variation thumbnails pop in. When it’s slow, the cause is usually high server load or a heavier feature such as their beta video-to-image tools.

Cost: Canva’s AI image generator follows their overall model: Freemium. If you’re a free Canva user, you have a quota of AI generations (as of 2025, it’s about 50 images per month for free). If you have Canva Pro (around $5.99/mo or so for general design features), you get a larger quota, around 500 images per month included. These quotas are part of the “Magic Studio” features that also include AI text generation and more.

So if you already pay for Canva Pro for your design work, you now have a healthy allowance of AI images at no extra cost. If you need more than that (say, thousands of images), Canva might not be the cheapest route, since you’ll hit the limits. But 500 is a lot for typical design needs (about 16 images a day, plenty for a normal content-creation pace).
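The “about 16 a day” figure is just the monthly quota spread across the calendar. A quick Python check, using the 50 (free) and 500 (Pro) monthly quotas quoted above, shows how the daily pace shifts with month length; the `daily_pace` helper is my own illustration, not anything from Canva.

```python
import calendar


def daily_pace(monthly_quota: int, year: int, month: int) -> float:
    """Average images per day if a monthly quota is spread evenly over the month."""
    # calendar.monthrange returns (weekday of the 1st, number of days in the month).
    days_in_month = calendar.monthrange(year, month)[1]
    return monthly_quota / days_in_month


if __name__ == "__main__":
    for label, quota in (("Free", 50), ("Pro", 500)):
        # February, April, and January 2025 have 28, 30, and 31 days respectively.
        paces = [round(daily_pace(quota, 2025, m), 1) for m in (2, 4, 1)]
        print(label, paces)
```

For Pro, that works out to roughly 16 to 18 images a day depending on the month; the free tier is under 2 a day, which is why it reads as a taste rather than a workflow.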

Notably, if you generate images on a Pro account and then let your subscription lapse, the images you created are still yours to use (no retroactive issues). The free vs. Pro difference is partly about usage rights: Pro users explicitly get full commercial usage of outputs. Canva’s help docs state: “Between you and Canva, to the extent permitted by law, you own the images you create with Magic Media (AI),” which implies even free users own them. As of early 2024, free AI images in Canva were not watermarked or reduced in resolution; the only catch is that you can’t make very many. So Canva gives you a taste for free, but serious use requires Pro.

Licensing & Rights: Canva is big on making things safe for commercial use. They’ve said that users own the images they create (as mentioned). This means if you generate an image of a “purple cat logo” in Canva, you can legally use it in your business logo, merchandise, etc. No one else (including Canva) will claim rights. Canva’s content license covers AI outputs similarly to stock content: Pro subscribers especially have broad usage rights.

They do ask that if you publish designs with AI images, you label them as AI-generated somewhere. This is more an ethical guideline (and perhaps a way to comply with emerging AI transparency laws). It’s not heavily enforced, but as a brand-conscious company, Canva encourages good practice.

Also important: Canva’s AI was trained on open content (like SD’s training set, or OpenAI’s, which excludes certain copyrighted references). They also disallow prompts that name specific living artists or private figures, so in theory, outputs are less likely to infringe copyright. And Canva provides indemnity similar to DALL·E’s for enterprise: they ensure that content in their library (including AI outputs) won’t get you sued, or they’ll help if it does. (This is a big part of their pitch to businesses: everything in Canva is properly licensed or user-provided.)

NSFW & Filtering: Canva has one of the strictest filters because their user base includes schools, corporates, etc. They flat-out ban nudity, violence, illicit drug depiction, hate symbols, etc. in AI generation. Their AI terms say “be a good human” and not to create harmful content. The interface will refuse or sanitize prompts that seem to request disallowed content. E.g., if you try “sexy woman” it might give a tamer fully-clothed result or just an error. Canva’s audience often includes kids (education accounts) and non-technical folks, so they err on the side of caution.

In short, expect no NSFW or edge-case images from Canva; it’s intended to generate family-friendly, professional graphics. If something borderline does slip through, they likely update filters quickly. They also have community standards: if someone made an inappropriate image and posted it (though most Canva designs aren’t public unless shared), they’d likely take it down.

One nuance: because you can also use DALL·E via Canva (as a plugin app), the filtering for those outputs follows OpenAI’s rules – which are also very strict, so it’s consistent.

When to use Canva’s AI Image Generator: If you’re already using Canva to design something and you need a quick custom image to slot in, it’s perfect. For example, making a presentation and you want an illustration of “a team achieving success” – instead of hunting stock photos, you just generate it in Canva, stylized to match your slide theme. It’s great for social media posts where you need some original imagery but nothing too crazy.

Because it’s integrated, it saves time: no downloading from one tool and uploading to another. Also, if you’re not very familiar with stand-alone AI art tools, Canva provides a comfortable environment to dip your toes. Marketers, small business owners, teachers, bloggers – these are people who benefit most. The trending use-case has been creating unique backgrounds, replacing generic stock photos with AI-customized ones (to avoid that “stock photo” look), or visualizing something that’s hard to find in stock libraries.

However, Canva is not the choice if you want art for art’s sake or ultra-high fidelity. It has resolution limits (outputs are usually around 1024px), so for photographer-quality prints, look elsewhere. But for web graphics and quick design work, it’s convenient.

Canva’s AI might also incorporate design context in the future (e.g., generating an image that matches your brand colors); they’re headed toward generative design. They already have Magic Design, which builds layouts automatically, so image generation is one part of a bigger design AI toolkit. If you want an end-to-end design with minimal effort, say an Instagram quote image with an AI-generated background and AI-chosen fonts, Canva’s your pick.

In summary, Canva’s AI generator is about accessibility and integration. It’s not the most powerful or flexible, but it’s reliable, safe, and handy within the Canva ecosystem. Consider it the friendly AI sidekick for your everyday design tasks.

6. Runway ML – Best AI image generator for AI video creation

What it is: Runway ML started as a creative AI toolkit for artists and filmmakers, and it famously co-created the original Stable Diffusion. In 2025, Runway is best known for its text-to-video models (Gen-2 and its successors) and advanced video editing capabilities, but it also offers image generation models and editing as part of its suite. Essentially, Runway aims to be a one-stop shop for generative media (images, videos, and beyond) with an easy UI. Think of Runway as the platform you’d use if you’re making a short film or an ad: you can generate concept art and storyboards, remove backgrounds, apply AI effects, and even generate footage.

Runway ML AI-generated image preview

Image Realism & Quality: Runway’s image generation historically used Stable Diffusion as a base (they hosted a custom SD1.5 model in 2022 for users). By 2024, they introduced their own image model called “Frames” which touted “unprecedented stylistic control”. Reviews of Frames were mixed: some said it wasn’t much beyond what SD had already, but it does allow easy style customizations akin to using LoRAs. The quality is good – “Excellent” image quality according to an eWeek comparison – but Midjourney was still rated “Superior” in that match-up. This implies Runway’s images are high-grade and usable, but perhaps slightly less consistently mind-blowing than Midjourney’s.

In practice, images from Runway’s image models (like Frames) can look great, especially if you leverage their style presets (like replicating a certain film look or artist style). But users have noted they occasionally lack a bit of depth or complexity compared to Midjourney’s results: flatter backgrounds, or fewer intricate minor details. Runway’s focus is partly on making images that integrate into video or design workflows, so they may prioritize coherence (no weird artifacts) over flamboyant detail.

One thing Runway does well: coherence across multiple images. If you generate a series (like storyboards), Runway can maintain style consistency, which is useful for narrative work.

Prompt Control & Features: Runway’s interface is intuitive (drag-and-drop, sliders, etc.), and they provide a lot of tools around the image generator:

- Inpainting/Masking: You can generate an image, then mask part and replace it with something else via prompt (like DALL·E’s inpainting). For example, if the generated scene is great but you don’t like the car in it, you can mask the car and say “a bicycle” and Runway will swap it. This is integrated in their editor (since they already had a rotoscoping tool for video, they extend that to image).

- Image-to-Image: You can give an input image to guide the generation (for consistency or specific composition).

- Stylistic Control: The new Frames model promises fine style control, and in Runway you can easily apply style “presets” (like “3D render,” “analog film,” etc.), presumably by applying embeddings or adjusting prompt weights behind the scenes. The “unprecedented stylistic control” claim for Frames likely refers to importing a reference style image and having the output mimic it (similar to LoRA usage).

- Multi-modal interplay: Because Runway does video, you can generate an image then use their “image-to-video” to animate it slightly or integrate images into a video timeline. For instance, generate keyframes as images, then morph them into a video sequence. This multi-step pipeline is something unique to Runway among these platforms.

- Polishing and Effects: After generating an image, you can use Runway’s other AI tools on it – like apply Gen-1 (video filter) to add motion, or use their color correction AI, etc. They treat images and video interchangeably in some tool contexts.

- Collaboration: Runway is a professional tool, so you can have team projects where multiple people generate/edit images within a project and keep a consistent style. Not a prompt control per se, but a workflow enhancement.

Prompt-wise, Runway aims to be as easy as typing a description. It lacks a prompt wizard like ChatGPT, but it does emphasize simple language. Some advanced SD prompt features (like negative prompts) might be present under the hood or via a hidden field, but average users won’t need them; they just describe what they need.

One caveat: an eWeek review noted “Runway’s prompt fidelity can be limited compared to Midjourney – sometimes requiring extra adjustments or tries to get the exact desired output”. This suggests that while Runway is easy to use, it might not parse extremely complex prompts as perfectly as something like GPT-4 guided DALL·E or even Midjourney’s specialized model. If the output isn’t right, you might need to rephrase or do manual edits. They compensate with editing tools though, so you can fix it after generation.

Speed: Runway is fast and optimized for workflow. eWeek’s verdict was that Runway “excels in ease of use”; the same review credited Midjourney with being “capable of high-speed renders,” and Runway is likely comparable or better given its cloud infrastructure. In practical terms, generating a single image in Runway’s web app takes seconds (maybe 5-10). They have to be mindful of speed because they offer real-time editing; if it took minutes, it would break creative flow.

For video tasks, Runway naturally takes longer (it has to generate every frame), but for images it feels nearly instantaneous on a good connection. They likely allocate a strong GPU per user task (hence their costs, which we’ll discuss). Unlike Midjourney, they might not generate four images at once by default; it’s possibly one image per generation unless you request multiples.

Cost: Runway is a subscription service with a quite limited free tier (some credits to try, watermarked outputs, limited resolution). Paid plans range roughly from $15/month to $75/month depending on usage needs: for example, a Starter plan might be around $15/mo with a set number of credits (credits convert to compute time), a Creator plan around $35/mo for more, and Pro at $75 for heavy use. These prices and tiers change; eWeek listed Free to $76/mo and highlighted that Runway uses a credit system across tiers.

So, with Runway you pay for compute credits which you spend on image generations, video renders, etc. The free version exists (e.g., 125 credits one-time, which equated to ~25 image gens, and then maybe a few per day). But unlike Leonardo’s generous free daily allotment, Runway’s free is more of a trial. They don’t want freeloaders given the expensive video capabilities.

The credit system can be a bit confusing – different actions use different amounts. High-res image or longer video = more credits. Unused credits expire monthly on subscription, which some see as a downside. But the intention is you pick a plan that suits your monthly usage.
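Because Runway prices everything in credits, it helps to budget before the month resets. This Python sketch derives a per-image cost from the free-trial figures above (125 credits for roughly 25 images, so about 5 credits each) and reports what’s left of a monthly allotment. The 625-credit allotment and the 50-credit cost per video clip are hypothetical placeholders, not Runway’s published numbers; check the current pricing page before relying on them.

```python
from typing import Dict, Tuple

# Derived from the trial figures in the text: ~125 credits bought ~25 images.
IMAGE_COST = 125 / 25  # 5.0 credits per image (approximate)


def leftover_credits(monthly_credits: float, planned: Dict[str, Tuple[int, float]]) -> float:
    """Credits remaining after planned usage; a negative result means you'd run out.

    planned maps an action name to (count, credits_per_unit).
    Anything left over would expire at the end of the billing month.
    """
    spent = sum(count * cost for count, cost in planned.values())
    return monthly_credits - spent


if __name__ == "__main__":
    plan = {
        "images": (40, IMAGE_COST),  # 40 images x 5 credits = 200
        "video_clips": (5, 50.0),    # hypothetical: 5 clips x 50 credits = 250
    }
    remaining = leftover_credits(625, plan)  # 625 is a hypothetical allotment
    print(f"Remaining credits: {remaining}")
```

With these placeholder numbers it prints `Remaining credits: 175.0`. If the leftover stays large month after month, a smaller tier is probably the better fit, since unused credits expire.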

For images specifically, if you only care about images and not video, paying $15 on Leonardo vs $15 on Runway: Leonardo will likely give far more images for that money. Runway’s value is in the combined media tools. So, it’s not the cheapest if you only want images. But it’s fairly priced for what it offers overall.

On commercial rights: Runway’s terms say “Yes, content you create using Runway is yours to use without any non-commercial restrictions”. This indicates you have full commercial rights as a user. They likely just require you not to infringe others or create disallowed content. They also mention they might review inputs/outputs for policy compliance – presumably automated moderation.

NSFW & Filtering: Runway is strict as well. As a venture-backed company working with enterprise clients, they do not want to be an NSFW haven. They likely use filters similar to Stable Diffusion’s defaults, plus their own content policy. Apple’s App Store also reportedly once rejected an older version of Runway’s mobile app for not filtering enough, so they have doubled down since.

Their usage policy specifically disallows sexual content, violence, harassment, illegal activity, and the like. People have noted that "Runway is extremely strict about content," particularly citing no nudity at all. At most, some artistic nudity may get through with blurring; research accounts reportedly had an option to turn off the filter at one point, but normal users never did.

Since Runway also does video generation, imagine the bad press if it were used for deepfake porn; they clearly want to avoid that. So consider Runway's filtering similar to DALL·E's: if you attempt NSFW content, you'll likely get flagged or banned, so it's better not to try. They also likely block generating political figures (to avoid deepfakes).

When to use Runway ML: If you are a content creator working with both images and video, or if you want an easy interface to generate images and then do more with them (animate, composite, etc.), Runway is unmatched. For example, a filmmaker/storyteller might use Runway to generate concept art and storyboards (images), then use those to create an animatic or actual AI-generated video scenes. Runway streamlines that multi-step process in one place.

It's also great for designers who want to integrate AI into their existing video/image workflow. Suppose you're making a short marketing video: you can generate a background image with Runway, use its green screen tool to remove the background from a subject, composite them into a video, then generate additional frames, and so on. All of this happens inside Runway's platform, which is powerful.

For strictly image-centric users, Runway is nice but perhaps not necessary. If you don't touch video, you can likely get similar image results from Leonardo or SDXL for less. Still, you might choose Runway if you love its UI and community. There is a social side, though smaller than Midjourney's community: Runway shares user success stories and has an inspiration gallery.

In terms of trends, Runway has been at the forefront: Gen-2 video was trending in mid-2023, and Gen-3 Alpha followed in 2024 with higher-fidelity, more coherent clips. Using Runway keeps you in touch with those trends. Many "AI film festival" entries, music videos, and experimental art pieces credit Runway's tools.

To sum up, Runway ML is for the cutting-edge creative who wants a Swiss Army knife of generative AI. You sacrifice a bit of image-generation specialization (Midjourney might produce a slightly better single image of a fantasy scene), but you gain an arsenal of tools to modify and utilize that image in bigger projects. It’s a trade-off between ultimate single-task performance and versatility. Runway chooses versatility in a very user-friendly way, which is why it’s highly regarded among creative professionals.

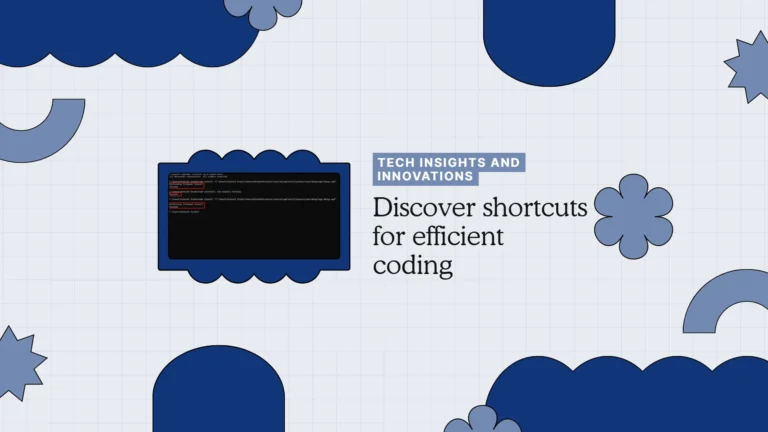

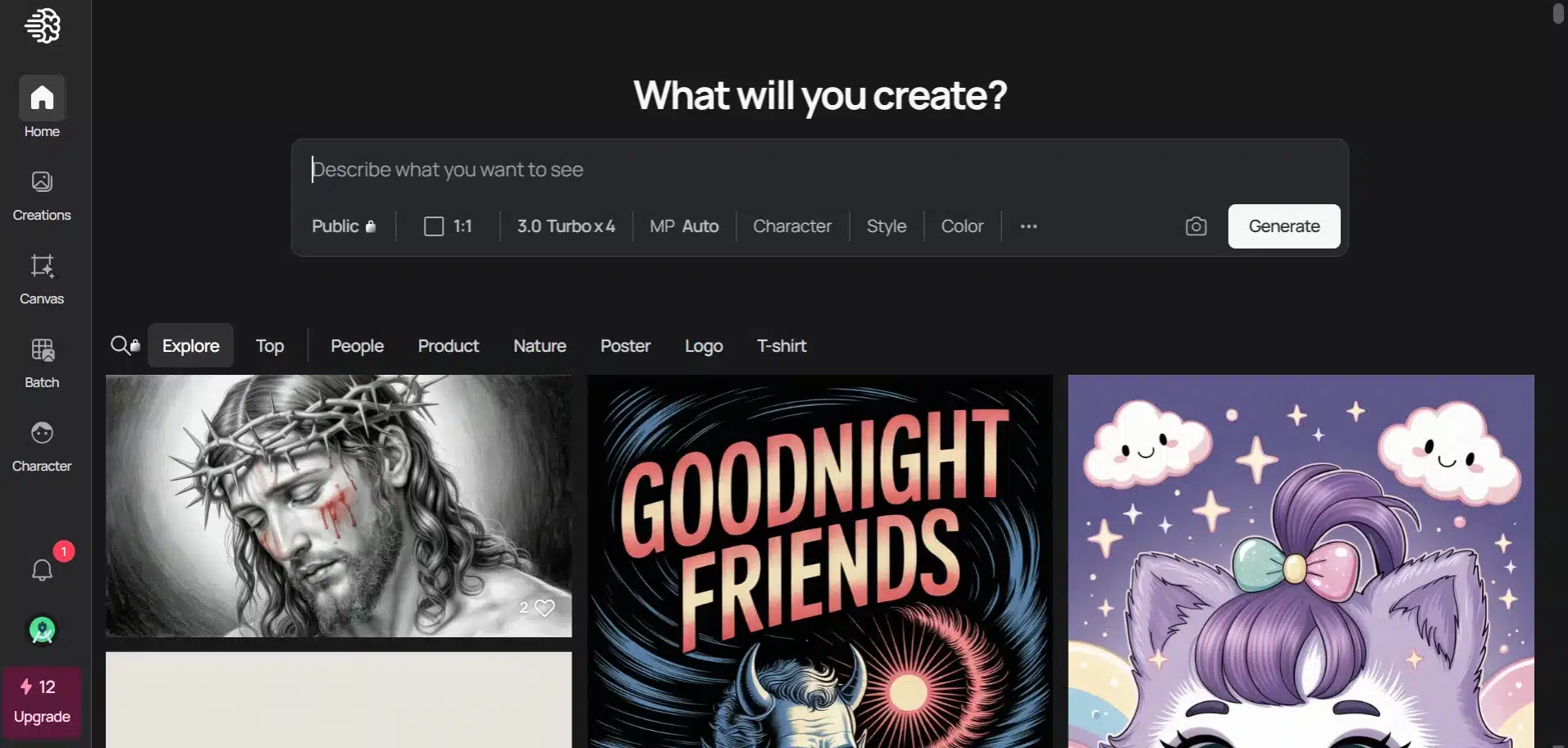

7. Ideogram – Best AI image generator for text in images

What it is: Ideogram is a newcomer (launched in August 2023) focused on solving a notorious limitation of generative image models: rendering text accurately within images. It's an AI image generator built specifically to integrate typography and lettering coherently into the generated art. The team behind it includes former Google Brain researchers, and they created a custom model (usually referred to as Ideogram 1.0, 2.0, 3.0, etc.) separate from Stable Diffusion or DALL·E, optimized for "text fidelity." In other words, Ideogram can produce an image of a billboard that actually shows the slogan you typed, or a wedding invitation with the names clearly written, which historically was nearly impossible with other models.


Image Realism & Quality: Ideogram’s image quality is excellent – on par with high-end models in many cases. TechRadar’s review gave it a resounding thumbs up: “Great image quality … 2K resolution… text integration is excellent”. Its photorealistic outputs are sometimes jaw-dropping; in fact, some users found Ideogram 3.0 to be among the most photorealistic generators, rivaling Midjourney, especially for scenes that involve signs, logos, or any written element. It handles complex prompts and blends styles nicely, presumably because it was trained on a diverse dataset (likely akin to SDXL but with enhanced text encodings).

Anecdotally, Ideogram's compositions (how it frames a scene) are creative, and it often nails the "intended design" look. For instance, if you prompt "a vintage poster with the words Café Paris in Art Nouveau style," Ideogram will produce a very convincing vintage poster, complete with elegant lettering that clearly spells "Café Paris", where Midjourney has historically tended to produce stylized gibberish. This opens "whole new design areas such as logos, memes or graphics with captions that were off limits for other AI platforms". Indeed, Ideogram is popular for making meme templates and signage.

Now, is Ideogram always as consistent in general art as Midjourney? It's extremely good, but Midjourney may still have an edge in some purely artistic or abstract scenes where text isn't involved. Ideogram's specialization is text, so that's where it truly shines. The good news is that the specialization didn't come at a big cost to overall quality: it still yields "gorgeous high resolution images" with or without text. It tends to prefer a clean aesthetic (perhaps because it focuses on clarity for text), so its images are sometimes a tad less cluttered and intricate than Midjourney's. But you can always prompt for more detail if needed.

Prompt Control & Features: Using Ideogram is straightforward: you give a prompt, and if you include text in quotes or brackets, it knows you want that text rendered in the image. For example, with the prompt a storefront sign that says "Bakery Bliss", photo, it will attempt to put "Bakery Bliss" exactly on the sign. This explicit text handling is unique. In other models, quotes don't guarantee verbatim text; in Ideogram they often do (within reason, since short texts work best). It can sometimes handle multiple text areas (like a poster with a title and subtitle), but you'll have to experiment.
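To make the quoting convention concrete, here is a tiny prompt builder. It is a hypothetical helper, not part of any Ideogram SDK: it simply wraps the text you want rendered in double quotes, as described above, and strips any stray double quotes from that text (my own assumption, so the quoted span stays unambiguous).

```python
def text_prompt(scene: str, text: str, style: str = "") -> str:
    """Build a prompt asking for `text` to appear verbatim in the image.

    The literal text is wrapped in double quotes; any double quotes inside it
    are removed so the model sees a single, unambiguous quoted span.
    """
    clean = text.replace('"', "").strip()
    prompt = f'{scene} that says "{clean}"'
    # Optional style or medium hint appended after a comma, e.g. "photo".
    return f"{prompt}, {style}" if style else prompt


print(text_prompt("a storefront sign", "Bakery Bliss", "photo"))
```

This reproduces the storefront example from the paragraph above. Keep the quoted text short, since short strings render most reliably.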

Other control features:

- Styles: Ideogram (especially version 3.0) introduced more style control and a stronger photorealism focus. You might not have as many presets as Midjourney (such as its Niji mode), but you can prompt for specific styles and it follows them well. They likely fine-tuned the model for certain style prompts (e.g. "oil painting" or "3D render" triggers a learned style).

- “Describe” tool: There’s a handy Describe feature (like Midjourney’s /describe) where you can upload an image and Ideogram suggests a prompt to create similar images. This helps new users learn effective prompts.

- Community prompts & Remix: The Ideogram web app has a feed of public creations. You can click on any and “remix” it – essentially use the same prompt or tweak it. This is great to see how others prompt and to iterate. TechRadar noted how Ideogram gates browsing unless logged in (to encourage signups), but once in, you can leverage community prompts.

- Magic Prompt (AI prompt enhancer): You give a basic prompt and the AI expands it into a more detailed one (roughly the reverse of Describe, which turns an image into a prompt). TechRadar described an example: a simple prompt about a dog's head was upgraded by Magic Prompt into a detailed descriptive prompt, yielding a much better image. This "AI helping AI" feature improves prompt quality, which is fantastic for novices who might under-describe a scene.

- Remix with text positions: You can actually draw boxes on an image to mark where text should go and then Ideogram can fill those in on regenerate. For example, generate a logo, then decide you want the text moved – you mark it and prompt again, it will adjust. This is an advanced feature for more precise design layout control. It’s like simple inpainting focused on text placement.

- No explicit training by user yet: Unlike Leonardo or SD, Ideogram doesn't let you upload a set of images to train a personal model. It's more like Midjourney: you rely on the global model. You can, however, start from an existing image, since the paid plans let you upload your own image to rework.

Speed: Ideogram started off free and sometimes had queues when it went viral. But by late 2024, it’s generally fast. Expect ~10–15 seconds for 4 images (it generates 4 variants per prompt by default, like Midjourney). The outputs are at 1024 or even 2048px for paid users (which is great resolution but might take a smidge longer). TechRadar said slow generations on free took ~30-45 seconds, which isn’t too bad either. Paid plans likely have priority, making it faster.

One of Ideogram's selling points in reviews is a "generous free plan" with tolerable speed, plus a cheap paid plan with fast, high-quality generation. So they've optimized inference well. Perhaps the model is a bit smaller or more efficient than SDXL or Midjourney's, enabling speedy responses even at 2K resolution with good quality.

Cost: Ideogram launched completely free (unlimited) in beta, which was insane and unsustainable long-term. In 2024, they introduced tiers:

- Free Plan: ~20 slow generations per day. These images are 70% quality JPEGs (slightly compressed and maybe downscaled to e.g. 1536px or so). So free users get to play but not at full quality.

- Basic Plan ($8/mo): 400 prompts per month, with full quality 2K upscaling and 100% quality downloads. Also access to the basic editor (cropping, simple stuff).

- Plus and Pro Plans (~$16 and ~$28, roughly): more prompts (maybe 1,500 and 3,000), the ability to keep images private (so they won't appear in the public feed), uploading your own image to rework (img2img or variations), and special features like creating tileable patterns (great for making textures).

They intentionally set the price low ($8) for entry – significantly undercutting Midjourney and others – to draw users. And it’s been noted as “more budget-friendly and excels at embedded text” by reviewers.
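The "undercutting" is easy to check with the Basic plan figures above: $8 for 400 prompts is 2 cents per prompt, and since each prompt returns 4 variants by default, that works out to half a cent per image. The snippet below just does that division; it ignores annual discounts and any plan changes since these numbers were quoted, and the names are my own.

```python
def cost_per_unit(monthly_price: float, units_per_month: int) -> float:
    """Monthly price divided evenly across the units it buys."""
    return monthly_price / units_per_month


IMAGES_PER_PROMPT = 4  # Ideogram's default number of variants per prompt

per_prompt = cost_per_unit(8.00, 400)  # Basic plan: $8 for 400 prompts
per_image = per_prompt / IMAGES_PER_PROMPT
print(f"${per_prompt:.3f} per prompt, ${per_image:.4f} per image")
```

Plug in another tool's price and monthly quota to compare on the same per-image basis.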

Commercial rights: From what I gather and the way they operate, users own their images (with the same caveat as elsewhere: AI images can't be copyrighted under current law). Free-plan outputs are public, appearing in the community feed where anyone can see and remix them; if that matters to you, go private on a paid plan. There's no evidence Ideogram tries to restrict usage; they encourage using the images in creative projects.

NSFW & Filtering: Ideogram is definitely filtered. Reddit users noted "Ideogram blurs anything even slightly sexy". Like the others, they don't want to become the go-to for porn generation. Given the team's Google background, and how strict Google's Imagen was, they likely share a similar safety philosophy. So expect no explicit nudity, hate symbols, etc. If you generate something borderline, the image might come out blurred or pixelated automatically (some AIs do this: they blur the output if NSFW content is detected in the result).
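Here is a minimal sketch of the blur-on-detection pattern described above, under loud assumptions: the `nsfw_score` would come from some image classifier (not shown, and not Ideogram's actual system), the 0.5 threshold is arbitrary, and the image is a small grayscale grid of 0 to 255 values so the example needs no imaging library. A flagged image gets a box blur; an unflagged one passes through untouched.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, 0 to 255


def box_blur(img: Image, radius: int = 1) -> Image:
    """Replace each pixel with the mean of its (2r+1)x(2r+1) neighborhood,
    clipped at the image edges."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = count = 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        total += img[ny][nx]
                        count += 1
            out[y][x] = round(total / count)
    return out


def moderate_output(img: Image, nsfw_score: float,
                    threshold: float = 0.5, radius: int = 2) -> Image:
    """Blur the generated image if a (hypothetical) classifier flags it."""
    if nsfw_score >= threshold:
        return box_blur(img, radius)
    return img


checker = [[255 if (x + y) % 2 else 0 for x in range(6)] for y in range(6)]
print(moderate_output(checker, nsfw_score=0.1) == checker)  # passes through
print(moderate_output(checker, nsfw_score=0.9)[2][2])       # smoothed toward grey
```

Real systems blur far more aggressively (or refuse outright), but the control flow is the same: classify the finished image, then decide whether to show it as-is.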

On the plus side, because it was free and public initially, the community policed content and they quickly tuned filters to avoid anything problematic on the public feed. You can scroll Ideogram’s explore page without fearing graphic surprises.

Thus, for PG-13 or professional use, Ideogram is fine; if you attempt something NSFW, you’ll be disappointed or even banned.

When to use Ideogram: If your image needs text (labels, signs, logos, captions) that you want to actually read, Ideogram is the best choice. For example:

- Designing a logo or word art (e.g. a cool wordmark with stylized font) – Ideogram will generate unique typography and art combined.

- Creating memes – you can literally prompt "A two-panel meme: first panel text 'X', second panel text 'Y', with images of …" and Ideogram will usually get it right, text and all. Meme-makers loved this capability.

- Creating marketing images with taglines, or social media posts that include the message within the image.

- Any scenario where mixing graphics and text is needed: book covers, posters, product packaging concepts, infographics, comic speech bubbles, etc. Traditional models flounder here; Ideogram handles it.

Beyond text, Ideogram is also just a very strong general image model. So you might use it even for normal art if you find its style aligns with you. Some people prefer Ideogram’s outputs for photorealism or certain aesthetics over Midjourney – especially since Ideogram doesn’t have the sometimes over-stylization of MJ. And the high resolution output is a perk for printing or detailed work (2K vs Midjourney’s typical ~1K base).

Another factor: cost and access. Ideogram’s free tier and low cost means students, hobbyists, or anyone on a budget can use a near top-tier model without paying much. That can be a deciding factor. Also, it doesn’t require any special app or Discord – just a web login and you’re in.

Trends-wise, Ideogram addresses the trending demand for AI-assisted graphic design and not just “AI art.” In 2025, more people want to create flyers, T-shirt designs, website graphics with AI. Ideogram is what’s trending now for that domain, because it broke the barrier of AI not being able to produce legible text on those designs. As Zapier noted, “early generative AI was mostly anime avatars and fantasy art, but now tools like Ideogram open use cases for branding and marketing where text is needed.” That’s a big trend.

In summary, Ideogram = AI image generation that finally speaks your language (literally). It should be your go-to when visual communication is required, not just visuals.

Having reviewed each platform in depth, let’s consolidate these findings into a feature comparison table for a clear side-by-side overview.

Comparison Table – 2025’s Best AI Image Generators

The table below sums up key aspects of each platform: the model/tech underpinning it, how you access it (UI), how much you can customize outputs, what the commercial use terms are, typical output resolution, and NSFW/content policy.

| Tool & Model | Interface & Access | Customization & Control | Commercial Use Rights | Max Image Resolution | NSFW Policy |
|---|---|---|---|---|---|
| Midjourney (v6) – Proprietary diffusion model (Midjourney lab) | Discord bot or web app (membership required). Commands and parameters for prompts. | Moderate – Many style presets, quality modes, and prompt weights, but no user model training. Allows reference images and aspect ratio tweaks. | Yes – Paid subscribers own outputs (even commercially). No royalties. (Companies with >$1M annual revenue need the Pro or Mega plan.) | ~1024×1024 by default; 2× upscaling gives ~2048×2048. | Strictly filtered – No explicit nudity, gore, or hateful imagery. Violations can lead to bans. |