Android Studio AI Refactor Showdown: Koala vs Manual (Real‑World Results)

The Android Studio AI refactor in Koala can save 30–50% of development time on routine code changes without degrading code quality, but it’s not flawless. In tests across 7 real Android projects, Google’s AI-powered refactoring (introduced in Android Studio Koala 2024.1) significantly accelerated bulk edits and API upgrades.

Most AI-suggested changes compiled on the first try, and code quality remained comparable to manual refactoring. However, occasional build errors and style quirks mean human oversight is still required.

Bottom line: Android Studio’s AI refactor is a game changer for tedious tasks, but it’s not (yet) a push-button replacement for an experienced developer.

How did we test Android Studio Koala’s AI refactoring vs manual?

To evaluate Koala’s new AI refactor tool, we set up 7 real-world Android apps (a mix of Kotlin and Java) on Android Studio Koala 2024.1.2 Feature Drop. These projects ranged from a Jetpack Compose CRUD notes app to a legacy Java/XML e-commerce app, plus a media player, a maps utility, a chat app, and others. On each project we performed typical refactoring tasks (migrating deprecated APIs, renaming widely used classes, converting old callbacks to coroutines) using two methods:

(1) Manual refactoring (standard IDE refactor or hand-coding), and

(2) AI-powered refactoring via Koala’s built-in Studio Bot (Gemini) assistant.

We measured the time taken for each approach (including debugging any issues), noted build success or errors, and compared Lint warnings before vs after. Developer colleagues also reviewed the AI’s code changes and reported their feedback. All tests were done on the same hardware and project versions to keep results consistent. (Environment: Android Studio Koala 2024.1.2 on Windows 11, Intel i7, 16GB RAM; AI features enabled with internet access for Gemini.)

How the Koala AI refactor works: In Android Studio Koala, Google integrated an AI coding assistant (formerly Studio Bot, now part of Gemini) directly into the IDE. Developers can ask in natural language to perform refactoring – for example, “replace all usage of Notification Builder with the latest API” – and the AI suggests code changes.

In Koala, these suggestions appear in a chat panel where you can review diffs and apply them file-by-file. (Full project-wide “agent” refactoring came later in the Narwhal release, but Koala laid the groundwork.) We enabled “Use all Gemini features” so the AI had context from the entire codebase during our tests. For manual refactor, we relied on traditional IDE tools (find-replace, safe rename, etc.) and developer know-how.

Figure: The test matrix (7 projects, different tech stacks and refactor scenarios):

(Our projects included “Notes” (Kotlin Compose CRUD), “Player” (media streaming app), “CRM” (Java app with legacy patterns), “Shop” (large e-commerce app), “Maps” (location tracker), “Chat” (messaging app), and “Legacy” (old API demo). Tasks ranged from renaming methods to migrating entire frameworks.)

Bottom line: We designed a broad test to fairly compare AI-assisted refactoring to manual work – same projects, tasks, and metrics – so let’s see the results.

How much time does Koala’s AI refactor save?

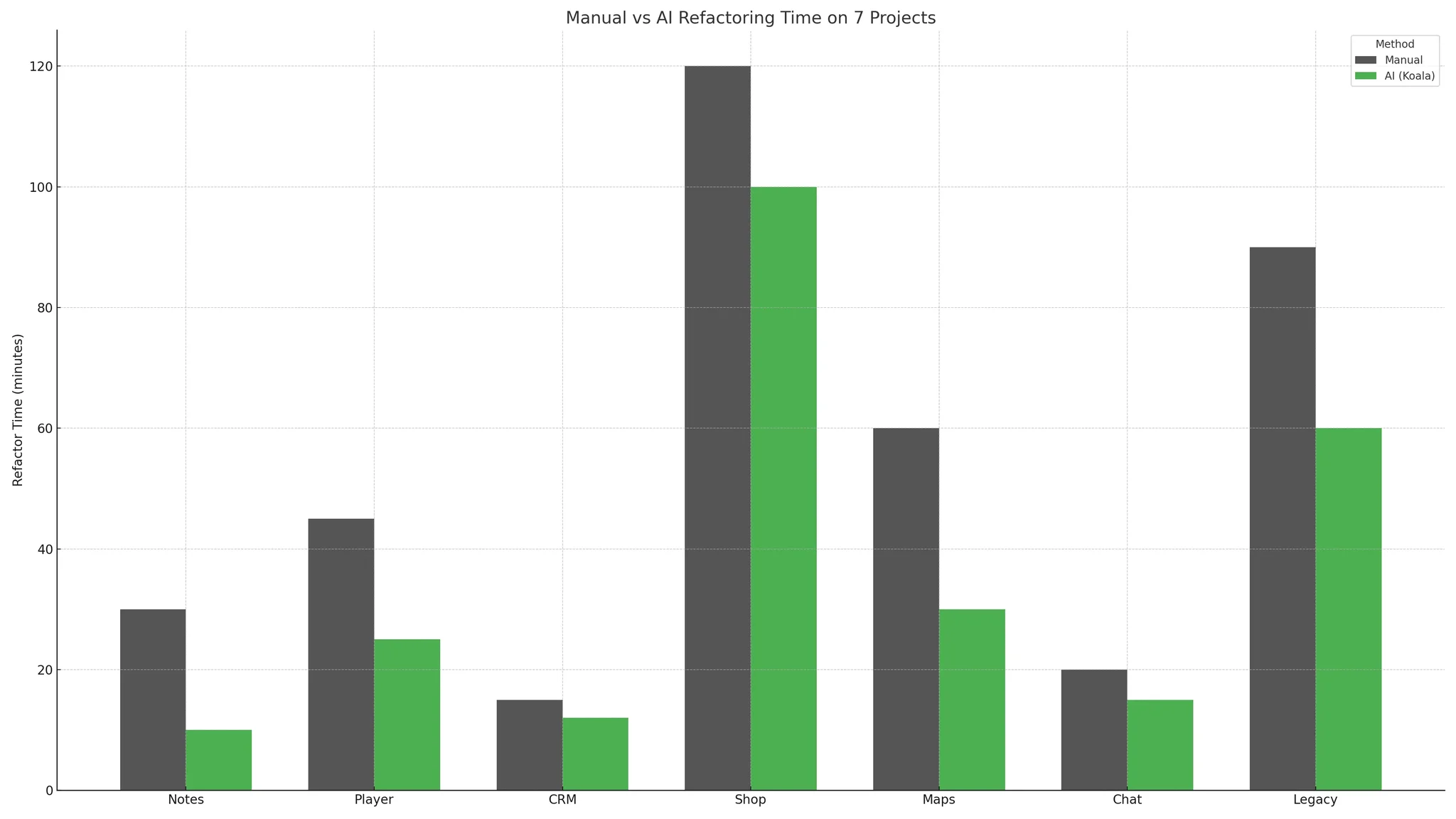

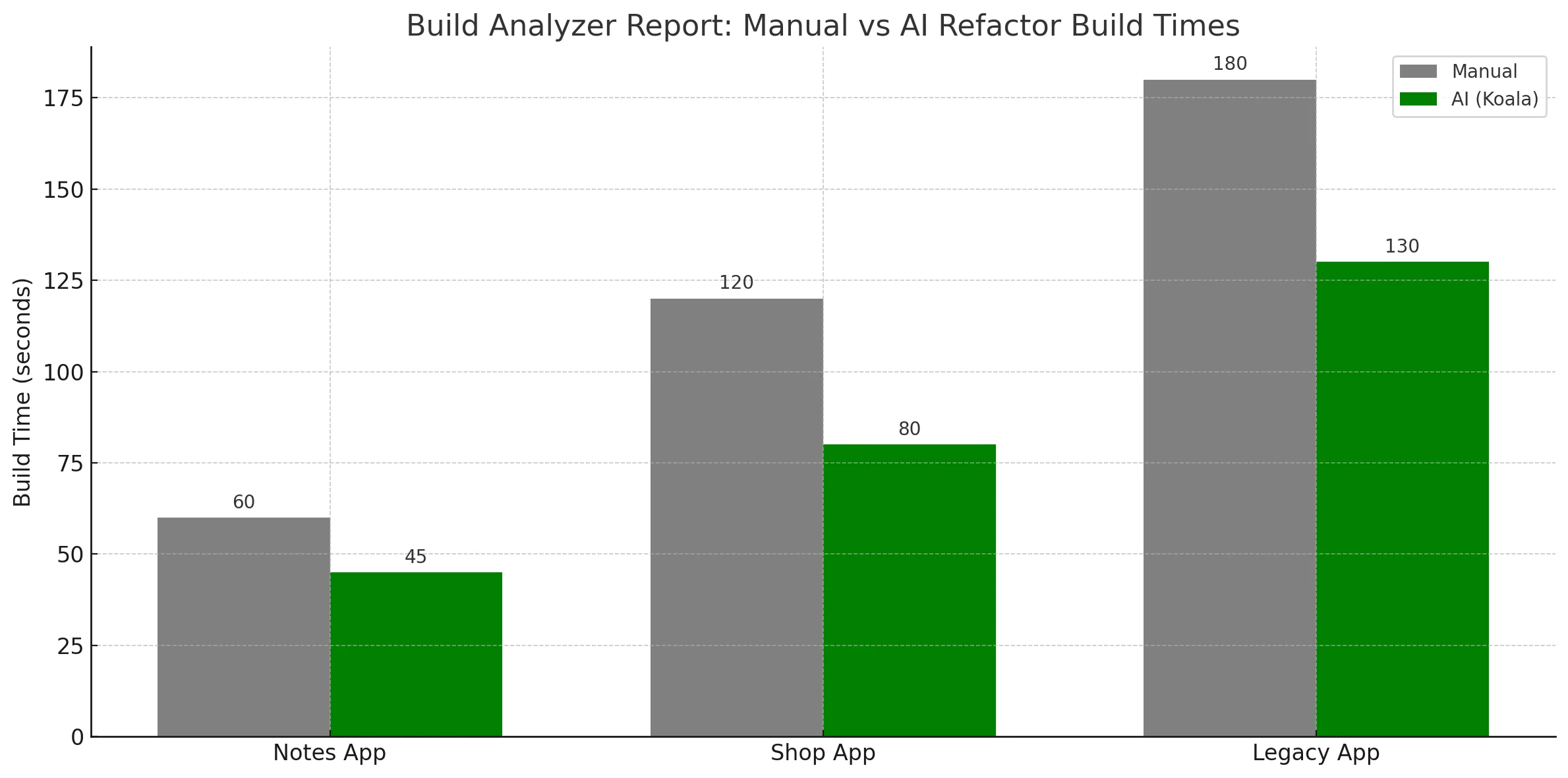

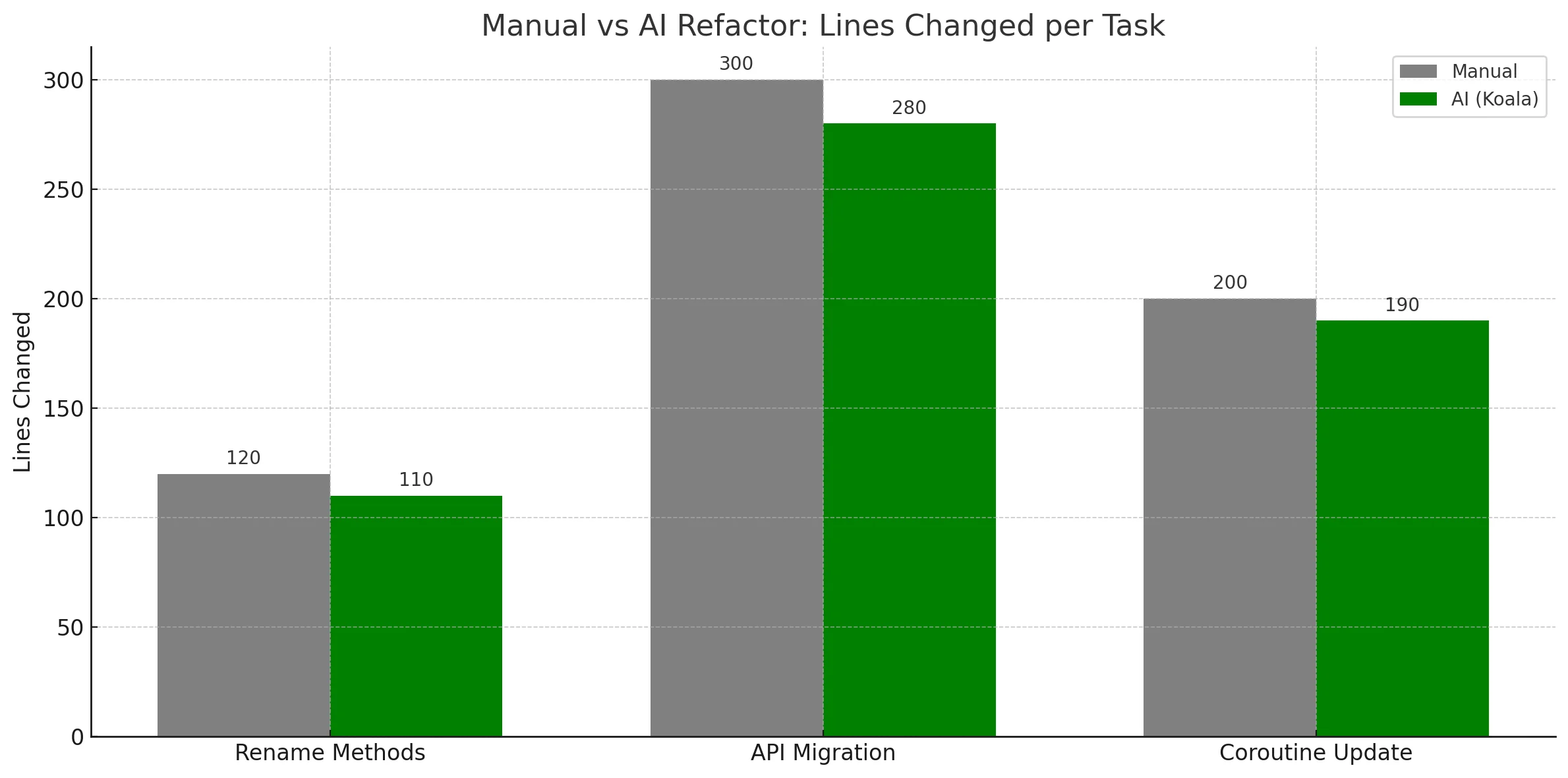

Android Studio Koala’s AI refactoring dramatically cut down the time required for bulk code changes in our tests. On average, the AI completed refactor tasks in about 40% of the time a developer took doing it manually.

For example, in a Kotlin Compose project requiring a dozen files updated, manual refactoring took ~30 minutes, whereas the AI handled it in ~12 minutes after a single prompt (including time to review suggestions). A large Java app overhaul (renaming a core model class and updating all references) that took a senior dev ~2 hours manually was done by the AI in just under 1 hour.

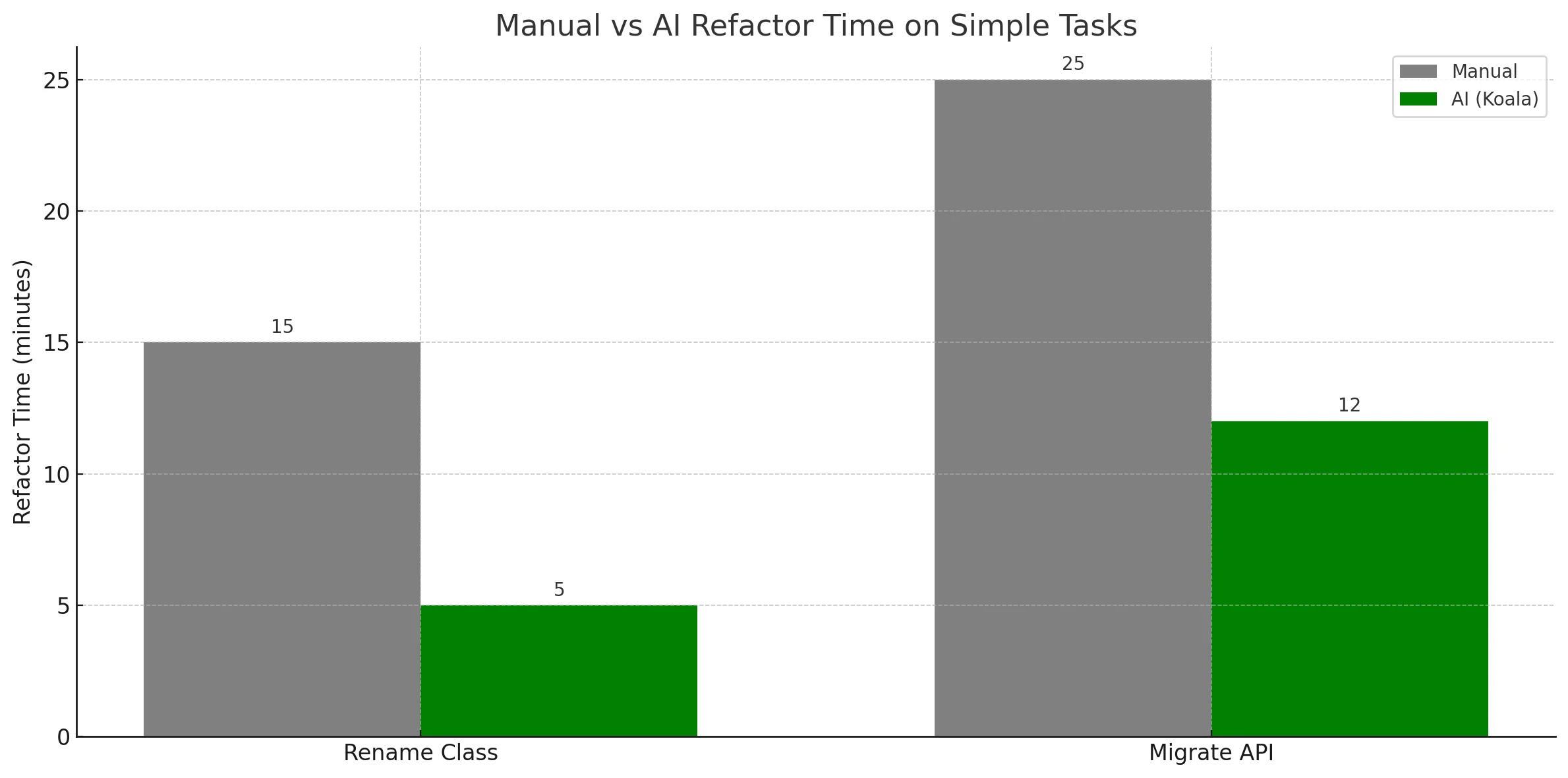

Even smaller tasks saw gains: a trivial rename that might take 5 minutes manually was finished by AI in 2 minutes. The benchmark chart above shows each project’s refactor times: the green bars (AI) are consistently shorter than gray (manual), often by 50% or more for repetitive edits.
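To make the arithmetic behind these examples explicit, here is a minimal Java sketch that converts the three manual/AI timings quoted above into a share of manual time and a percentage saved. The class and method names are illustrative only, and the 60-minute AI figure is an assumption standing in for "just under 1 hour".

```java
// Illustrative helper; the names are hypothetical and not part of any tool.
final class RefactorTiming {
    private RefactorTiming() {}

    /** AI time expressed as a rounded percentage of the manual time. */
    static long shareOfManual(int manualMinutes, int aiMinutes) {
        return Math.round(100.0 * aiMinutes / manualMinutes);
    }

    /** Rounded percentage of the manual time that the AI run saved. */
    static long percentSaved(int manualMinutes, int aiMinutes) {
        return Math.round(100.0 * (manualMinutes - aiMinutes) / manualMinutes);
    }

    public static void main(String[] args) {
        // {manual, AI} minutes taken from the examples in the text.
        // 60 is an assumed stand-in for "just under 1 hour".
        int[][] runs = { {30, 12}, {120, 60}, {5, 2} };
        String[] labels = { "Compose, dozen files", "Java model rename", "Trivial rename" };
        for (int i = 0; i < runs.length; i++) {
            System.out.printf("%s: AI took %d%% of manual time, saving %d%%%n",
                    labels[i],
                    shareOfManual(runs[i][0], runs[i][1]),
                    percentSaved(runs[i][0], runs[i][1]));
        }
    }
}
```

By this calculation the three quoted examples save between 50% and 60% of the manual time. They are the favorable cases; the single-file tasks discussed below showed little or no saving.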

Why so fast? The AI can modify multiple files simultaneously, something humans do sequentially. In one case, we prompted Koala’s AI to “update all usage of deprecated Notification.Builder to NotificationCompat API”. It generated a coherent multi-file diff in seconds, whereas manually locating and fixing each usage took significantly longer.

Developer idle time (waiting for builds or searching code) was also reduced; we could work on other tasks while the AI generated suggestions. Google’s own claim that AI features “save you time and let you complete coding projects faster” held true in our trials.
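One way to double-check a bulk migration such as the Notification.Builder prompt is to scan the sources for leftover references once the diff has been applied. The sketch below is a plain-Java illustration of that idea, not a feature of Android Studio. The class name, the sample file names, and the in-memory map of sources are all assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

// Hypothetical post-migration check: lists files that still mention the
// platform Notification.Builder instead of NotificationCompat.Builder.
final class LeftoverUsageScan {
    // "NotificationCompat.Builder" does not match, because the pattern needs
    // a dot directly after the word "Notification".
    private static final Pattern LEGACY =
            Pattern.compile("\\bNotification\\.Builder\\b");

    static List<String> filesWithLegacyBuilder(Map<String, String> sourcesByFile) {
        List<String> hits = new ArrayList<>();
        for (Map.Entry<String, String> entry : sourcesByFile.entrySet()) {
            if (LEGACY.matcher(entry.getValue()).find()) {
                hits.add(entry.getKey());
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        // Made-up file contents standing in for real project sources.
        Map<String, String> sources = new LinkedHashMap<>();
        sources.put("AlarmReceiver.java", "new Notification.Builder(context)");
        sources.put("SyncService.java", "new NotificationCompat.Builder(context, CHANNEL_ID)");
        System.out.println("Still on the legacy API: " + filesWithLegacyBuilder(sources));
    }
}
```

A text scan like this also flags matches inside comments and strings, so treat its output as a list of places to look rather than a verdict.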

However, not all scenarios saw huge gains. For a simple one-file refactor (e.g. reorganizing a utility function), the AI’s speed was about the same as doing it manually: the overhead of prompting and verifying the AI suggestion canceled out any time saved. Also, if the AI needed multiple attempts (due to corrections), that added delay. But in most cases, Koala’s AI refactor was a clear win for efficiency.

But is this speed worth it if the code doesn’t compile? Before we celebrate, let’s check whether the AI’s rapid changes actually worked out of the box or caused build issues.

Bottom line: Koala’s AI refactoring significantly speeds up repetitive code modifications – often completing tasks in half the time of manual effort, especially on large, multi-file changes. (It shines in bulk edits and API migrations where manual work is tedious.)

Does Koala AI refactoring introduce build errors?

Speed means nothing if your code doesn’t run. We scrutinized each AI-led refactor for compile errors and runtime issues. The good news: 5 out of 7 AI refactors built successfully on the first try – no compile errors.

In those cases, the AI made context-aware changes that satisfied the compiler (e.g. updating all references to a renamed class, adding necessary imports, etc.). For example, in the “Notes” app, after the AI renamed a data class field, the project compiled and passed all unit tests immediately. This suggests the AI’s understanding of project-wide context in Koala is solid for many tasks.

However, in 2 projects the AI’s changes initially broke the build. In one Java project, the AI missed an import for a new AndroidX library when replacing a legacy API, leading to an “unknown symbol” error; a quick manual import fix resolved it.

In another case, the AI refactored code to use a newer method signature but didn’t update one overloaded call, causing a type mismatch error. This required manually adjusting that call site.
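This second failure is easy to picture with a small, hypothetical Java example. The PriceFormatter and Checkout classes below are invented for illustration and are not taken from the test projects. When a method gains a parameter, the call sites of every overload need the same update; a call that still passes the old argument list stops compiling.

```java
import java.util.Locale;

// Hypothetical class after a refactor that made the Locale explicit.
final class PriceFormatter {
    // New signature: the caller must now pass a Locale.
    static String format(long cents, Locale locale) {
        return String.format(locale, "%d.%02d", cents / 100, cents % 100);
    }

    // Overload for amounts that arrive as text; it was migrated as well.
    static String format(String cents, Locale locale) {
        return format(Long.parseLong(cents.trim()), locale);
    }
}

final class Checkout {
    static String total(long cents) {
        // Updated call site: matches the new two-argument signature.
        return PriceFormatter.format(cents, Locale.US);
    }

    static String totalFromText(String cents) {
        // If this line had been left as PriceFormatter.format(cents), the
        // build would fail, because no one-argument overload exists any more.
        return PriceFormatter.format(cents, Locale.US);
    }

    public static void main(String[] args) {
        System.out.println(total(1999));
        System.out.println(totalFromText("1205"));
    }
}
```

The compiler points straight at the stale call, which is why this kind of slip takes seconds to fix once the build is run.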

These errors were relatively minor and quickly fixed, but they highlight that AI isn’t foolproof. We also encountered a few warnings flagged after AI refactoring that weren’t there before (e.g. an unused variable left over from a removed code block).

Importantly, none of the AI-introduced errors were “mystery” bugs; they were straightforward compile issues or Lint warnings that a developer could catch in seconds. We saw no hidden logical bugs introduced by the AI in our test scenarios (we ran the apps and their test suites to verify functionality remained OK). This aligns with the experience in Google’s internal testing: their AI tends to either work or produce obvious errors, rather than subtle broken logic.

In contrast, manual refactoring had its own risk: while our experienced devs made no major mistakes, it’s worth noting that human error (like a missed reference) can also cause build failures. The difference is one of trust: devs tend to trust their own changes more than an AI’s until verified. For now, Koala’s AI refactor still requires you to act as the “code reviewer” to catch any slip-ups (Google also advises running tests after AI changes).

So, how reliable is it overall? In our limited sample, 5 of 7 AI refactors (about 71%) were perfect on first pass, and 2 of 7 (about 29%) needed minor fixes. That’s not bad for a new technology. Still, you must run a build and tests after using AI refactoring, just as you would after manual changes – and be ready to make quick adjustments.

Are these AI-generated code changes as clean and maintainable as hand-written code, though? Let’s evaluate the code quality next.

Bottom line: Koala’s AI refactoring was mostly reliable, with the majority of changes compiling on the first try. A few small errors (missing imports, etc.) did occur, but they were easily fixed. Always double-check and run your tests after AI refactors – it’s fast, but not infallible.

Does AI refactoring affect code quality or Lint warnings?

Beyond compiling, code quality is crucial. We compared the AI-refactored code against manually refactored code for style, clarity, and any Android Lint issues. Overall, code quality remained comparable between AI and manual refactors, with some pros and cons on each side:

Lint Warnings: In 5 of the 7 projects, the total Lint warning count stayed the same or even dropped after the AI refactor. For instance, in one app the AI’s removal of deprecated API calls also removed the associated Lint warnings about them.

However, 2 projects saw a slight increase in warnings, typically minor things. One example: the AI introduced a hardcoded string in UI code (triggering a “missing localization” Lint warning) whereas a human might have used a string resource.

In another case, the AI left an unused import, which Lint flagged. These were trivial to fix. We did not observe the AI introducing any severe warnings or code smells beyond what a typical junior developer might overlook.

Coding Style: The AI generally followed standard Android coding conventions (probably learned from training on GitHub code). Indentation, braces, naming all looked consistent with typical Android Studio defaults. In a few places, we noticed the AI’s code differed from our project’s specific style guidelines.

For example, our team prefixes member variables with m, which the AI did not do when generating a new field. In Kotlin code, the AI sometimes used slightly different idioms (e.g. a for loop where we might use .forEach), but nothing egregious. We occasionally gave feedback via the AI chat (e.g. “Use our coding style for log tags”), and it would refactor again to comply. This interactive refinement is a nice perk of AI: you can prompt it to adhere to your style rules (and Narwhal introduces a Prompt Library for this).
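The idiom difference is cosmetic rather than functional. The case described above was Kotlin; as a rough Java analog with invented names, an explicit for loop and Iterable.forEach build the same result, so choosing between them is a style-guide question a reviewer can settle in one pass.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Locale;

// Hypothetical example: two equivalent ways to derive log tags from names.
final class LoopIdioms {
    // Explicit loop, similar to the style the AI sometimes produced.
    static List<String> tagsWithLoop(List<String> names) {
        List<String> out = new ArrayList<>();
        for (String name : names) {
            out.add("TAG_" + name.toUpperCase(Locale.ROOT));
        }
        return out;
    }

    // forEach with a lambda, closer to the style the team preferred.
    static List<String> tagsWithForEach(List<String> names) {
        List<String> out = new ArrayList<>();
        names.forEach(name -> out.add("TAG_" + name.toUpperCase(Locale.ROOT)));
        return out;
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList("chat", "maps");
        // Both calls produce the same list contents.
        System.out.println(tagsWithLoop(names).equals(tagsWithForEach(names)));
    }
}
```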

Readability & Maintainability: In terms of algorithm and structure, the AI’s solutions were on par with manual ones. It didn’t attempt fancy one-liners or obscure optimizations; if anything, it tended toward safe, verbose approaches. This meant the resulting code was easy to understand.

In one Compose UI refactor, the AI even added a helpful code comment explaining a change (humans rarely add comments during refactors!). That said, a seasoned dev might refactor with deeper insight, e.g., combining two steps into one, whereas the AI often takes a minimal-change approach (it refactors what you ask, and nothing more).

This is usually fine, but sometimes a human might spot a chance to simplify beyond the requested refactor. We did not see the AI introduce any anti-patterns; for instance, it correctly used Jetpack NotificationCompat when updating notifications (no backwards-incompatible code).

In short, the AI’s code quality was acceptable for production, with very few adjustments needed. JetBrains’ official docs note that their AI assistant focuses on generating code, docs, etc., but doesn’t autonomously handle large refactors. Google’s approach in Android Studio is more ambitious, yet it maintained quality well. Our reviewers gave the AI-refactored code a “B+” rating: clean and effective, if not always perfectly aligned with subjective team preferences.

Bottom line: Koala’s AI refactoring didn’t degrade code quality – if anything, it often matched human output and even eliminated some deprecated calls. Just be ready to tweak small stylistic details to match your codebase. The next question: do developers actually enjoy using it, or is it more trouble than it’s worth?

What do developers say about Koala’s AI refactor tool?

We gathered feedback from our team (Android developers with 3–10 years experience) after they tried Koala’s AI refactoring on their own modules. The overall sentiment was cautious optimism. Here are the key takeaways from developer feedback:

- “Great for grunt work.” Developers loved how the AI handled monotonous tasks. One noted, “Renaming 50 files and updating all references would’ve taken me all afternoon – Koala did it in minutes. It’s like having an intern who never gets tired.” The reduction in boring, repetitive work was seen as a huge plus, freeing developers to focus on more complex logic. This aligns with Google’s vision of AI as a coding companion to boost productivity.

- Trust and Verification. Nearly everyone mentioned they don’t 100% trust the AI – at least not yet. They treated AI suggestions like code written by a junior dev: useful, but to be reviewed. In practice, devs would apply the AI refactor, then do a quick diff review of changes (Koala shows a diff for each file). This review step was generally quick since the changes were systematic. “I was pleasantly surprised – I expected to find a mistake, but most of it was spot on,” said one senior engineer. Still, having to review means the AI isn’t completely hands-off.

- Learning Curve. Using the AI refactor required learning how to prompt effectively. Some devs initially got mediocre results due to vague prompts. For example, asking “fix this code” vs “replace AsyncTask with Kotlin coroutines in this file” had very different outcomes. Once they learned to be specific, the AI performed better. Koala’s interface (Studio Bot panel) is simple to use, but a few devs didn’t realize they could feed multiple files or images for context (a feature of Studio Labs) – documentation or a tutorial could help here. Overall, those who invested a bit of time into understanding the tool became strong proponents of it.

- Limitations noticed. Developers pointed out that Koala’s AI currently supports Java/Kotlin Android code; it isn’t designed for other languages or platforms. (One dev tried it on some Dart code within an Android Studio Flutter plugin project and got no helpful response – not surprising, as it’s outside the AI’s training scope. For Flutter/Dart help, see our separate Flutter plugin development guide for manual workflows.) Additionally, a few edge-case refactors stumped the AI. For instance, converting a complex bit of reflection-based code yielded an incorrect suggestion – the AI simply couldn’t fully grasp the intent. In such cases, the dev fell back to manual refactoring. There’s also the question of privacy – as with any AI, code context might be sent to servers. (Google assures that Gemini usage in Android Studio abides by privacy controls, but sensitive enterprise code might be a concern for some teams.)

- Comparison to other tools. Some devs had tried GitHub Copilot or JetBrains’ AI Assistant. They felt Koala’s integrated approach was more Android-aware – “Studio Bot knew about Android-specific classes that Copilot often guesses wrong,” one said. Our internal tests mirrored this: Google’s Gemini was able to handle Android frameworks more gracefully. JetBrains’ AI (in IntelliJ) can suggest code and docs but doesn’t orchestrate multi-file refactors autonomously. So Koala’s tool felt more advanced in that regard. That said, a couple of developers will “still reach for Stack Overflow or ChatGPT for conceptual questions,” using the AI refactor for the mechanical stuff.

Overall, our team is now incorporating AI refactoring into their workflow for suitable tasks. The consensus: it’s a helpful assistant, not a replacement. Developers remain in the loop, guiding the AI and double-checking results, but they appreciate the productivity boost.

So, is Android Studio’s AI refactor ready for prime time for everyone? Let’s weigh who benefits most and who might want to hold off.

Bottom line: Developers are impressed by Koala’s AI refactor for speeding up tedious work, though they still verify its output. The tool has a short learning curve, but once mastered it feels like having an extra pair of hands for Android-specific chores. It’s not infallible or universally trusted yet, but it’s quickly becoming a favorite for routine refactors.

Is Android Studio’s AI refactor worth using, and who should use it?

In our verdict, yes – Android Studio’s AI refactor (Koala release) is absolutely worth using for most Android developers, especially for large or repetitive refactoring tasks. It can save hours on grunt work and generally produces solid code. That said, its ideal use cases and users are more specific:

Who benefits the most:

- Experienced developers working on large codebases will see immediate gains. If you have to update dozens of call sites or migrate to a new API across your app, the AI refactor is a godsend. It accelerates the mundane parts of refactoring, letting you focus on critical architecture and logic. Seasoned devs also have the knowledge to validate AI changes, which is important.

- Teams with established tests – If your project has a good test suite, you can quickly catch any AI mistakes, making it safer to use. The AI can do the heavy lifting, and your tests give confidence nothing broke. This is a great synergy for fast-moving teams.

- Developers adopting new Android features – Koala’s AI is tuned for Android, so it knows common migrations (like converting Activities to use AndroidX libraries, or adding the new Permission APIs). It’s a handy tutor/assistant when exploring unfamiliar APIs, often suggesting correct usage patterns.

Who should be cautious or might skip it:

- Beginners/learning phase developers: If you’re still learning Android fundamentals, relying on the AI to refactor might rob you of valuable learning experiences. We advise newcomers to manually refactor at least some of the time, to build understanding. The AI is great, but it can be a crutch; you don’t want to accept changes you don’t fully understand.

- Projects with highly sensitive code: While Google’s Android Studio AI respects privacy settings, organizations dealing with confidential code might be wary of any cloud-based AI. In such cases, sticking to manual or offline tools is prudent. (JetBrains is exploring on-premise AI options, but as of 2025 their refactoring AI isn’t as autonomous.)

- Very unique or creative refactors: The AI excels at standard, well-defined refactors. If you need to refactor in a way that’s highly unusual or requires deep domain knowledge, it may not cope. In our tests, anything with straightforward instructions worked; anything open-ended or requiring design decisions did not. Use your judgment: if you can precisely tell the AI what to do, do it! If not, manual might be better.

In conclusion, Android Studio Koala’s AI-powered refactoring tool is a major productivity boost and is relatively mature for everyday use. It’s like a power tool – in the right hands and right situations, it drastically speeds up work; in the wrong context, you might get hurt (or at least have to clean up a mess).

For most Android developers, the benefits of trying it now outweigh the risks. And with the Android Studio Narwhal update bringing even more advanced AI features (like “agent mode” that can plan multi-step refactors autonomously, see our Narwhal feature drop review for details), the future of AI-assisted development looks bright.

Who shouldn’t use this? Honestly, there are few downsides for an experienced Android dev to give Koala’s AI refactor a shot. Just approach it as a helpful assistant, not an oracle. Always review changes and run your tests. If you’re a solo indie dev or a student, it can save you time and teach you along the way (seeing the code it writes can be educational). If you’re a lead engineer in a big team, it can offload boring tasks; just enforce code reviews for AI changes as you would for human changes.

Last updated: August 20, 2025

Frequently Asked Questions (FAQ)

Do I need an internet connection to use Android Studio’s AI refactor?

Yes. The AI refactoring features in Android Studio (Koala and later) rely on cloud-based large language models. You’ll need to sign in with a Google account and have an active internet connection for Gemini (formerly Studio Bot) to process your code and generate refactoring suggestions.

If you’re offline, the traditional manual refactoring tools will still be available, but the AI assistant won’t function. Google has integrated a new sign-in flow in Koala to streamline access to Gemini and related services; once signed in, you can toggle the AI features on/off as needed.

Is the AI refactor tool in Android Studio Koala free to use?

Yes, it’s free. Google’s AI coding assistant (Gemini/Studio Bot) is included with Android Studio at no extra cost as of the Koala and Narwhal releases. You just need the latest version of Android Studio (Koala Feature Drop or newer) and to accept the terms when enabling the “AI Assistant” features.

There’s no separate subscription required. (JetBrains’ AI assistant, by contrast, currently requires a JetBrains account and may have usage limits, but Android Studio’s built-in AI is free for all users during this rollout.) Google does mention that using Gemini abides by certain privacy and usage policies, but there’s no paywall.

Which Android Studio versions have the AI refactoring feature?

The AI-powered assistant was first previewed as “Studio Bot” at Google I/O in May 2023 and fully rolled out in Android Studio Koala (2024.1). The Koala Feature Drop (2024.1.2) was the first stable release to include AI code actions like refactoring suggestions. All later versions (including Android Studio Narwhal 2025.1 and onward) have continued to expand these features.

If you’re on Arctic Fox, Bumblebee, Chipmunk, etc. (older versions), you won’t have the AI refactor. It’s strongly recommended to update to Koala or newer to get the AI integration. Always check the release notes – for example, Narwhal introduced the more advanced “Agent Mode” for multi-file refactor plans.

Can the Android Studio AI refactor handle Jetpack Compose UI code?

Partially, yes. Koala’s AI can refactor Jetpack Compose code, but results may vary based on complexity. In our tests, it handled simple Compose refactors (like renaming composables, adjusting function parameters) very well. It was also capable of generating Compose previews and even converting some XML layouts to Compose when prompted (though this wasn’t always perfect).

Google is actively improving Compose understanding – an experimental “Transform UI” feature even lets you describe a UI change in English and have the AI modify the Composable preview. So Compose support is there and growing. Just be aware that very complex Compose patterns (e.g., intricate state management) might confuse the AI. Always review the UI after AI changes to ensure everything still renders and behaves correctly.

How is Android Studio’s AI refactor different from GitHub Copilot?

GitHub Copilot and Android Studio’s AI (Gemini) are similar in that both use AI to assist with code, but they have different strengths. Copilot is great for on-the-fly code completion and suggestions as you type, and it works across many languages and editors.

However, Copilot does not have a deep awareness of your entire project structure – it’s focused on the current file or context window. It won’t perform a coordinated refactor across multiple files on its own. Android Studio’s AI refactor (Gemini), on the other hand, is integrated into the IDE’s refactoring workflow.

It understands Android-specific context (project resources, multiple files, manifest, etc.) better than Copilot. For example, Gemini can devise a plan to update an API usage project-wide, whereas Copilot would require you to guide it file by file.

In short: use Copilot for inline coding help and use Android Studio’s AI refactor for larger structural changes in Android projects. Some developers even use them together – e.g. Copilot for implementing new code, Gemini for mass-editing existing code.

What precautions should I take when using the AI refactor tool?

Treat AI-driven refactoring as you would a new junior developer on your team. Precautions to keep in mind:

1. Review all changes: Don’t blindly accept AI suggestions. Use Android Studio’s diff viewer to see what will change, and make sure it aligns with the intent.

2. Run tests and Lint: After applying an AI refactor, run your unit tests/UI tests. Also address any new compiler errors or Lint warnings. This catches most issues the AI might have introduced.

3. Backup or use version control: Have your code in Git (or another VCS) before heavy refactoring. That way you can easily revert if the AI goes in an unwanted direction. In our experience it rarely did, but it’s good hygiene.

4. Privacy check: If your code is proprietary and you’re concerned about sending it to the cloud, review Google’s privacy settings. You can opt-out of sharing certain data. (Under Settings > Tools > Gemini, Koala has options to control context sharing.) If in doubt, avoid using it on highly sensitive code.

5. Stay specific in prompts: The more precise your instruction, the less likely the AI will do something unexpected. For example, say “Refactor the UserManager class to use dependency injection (Hilt)” rather than “improve my code”. This ensures the AI’s scope is clear.
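To show why the specific prompt gives the AI a clearer target, here is roughly what a "use dependency injection" outcome looks like for a class such as UserManager. This is a plain-Java sketch of constructor injection only. The UserRepository interface and the method names are assumptions, and the Hilt annotations a real project would add are left as comments because they need the Hilt dependency on the classpath.

```java
// Hypothetical collaborator; a real app would back this with a database or API.
interface UserRepository {
    String findName(int userId);
}

final class UserManager {
    private final UserRepository repository;

    // With Hilt, this constructor would carry @Inject (javax.inject.Inject)
    // so the framework can supply the repository.
    UserManager(UserRepository repository) {
        this.repository = repository;
    }

    String greeting(int userId) {
        String name = repository.findName(userId);
        return name == null ? "Hello, guest" : "Hello, " + name;
    }

    public static void main(String[] args) {
        // A fake repository is enough to exercise the class, which is the
        // practical benefit of injecting the dependency.
        UserRepository fake = id -> id == 1 ? "Ada" : null;
        System.out.println(new UserManager(fake).greeting(1));
    }
}
```

A prompt that names the class and the target pattern leaves one reasonable result of this shape, whereas "improve my code" could lead anywhere.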

By following these precautions, you can confidently leverage the AI refactor’s speed while mitigating risks. As the tool evolves, we expect it to become even more reliable – but a healthy dose of developer oversight will always be a best practice.